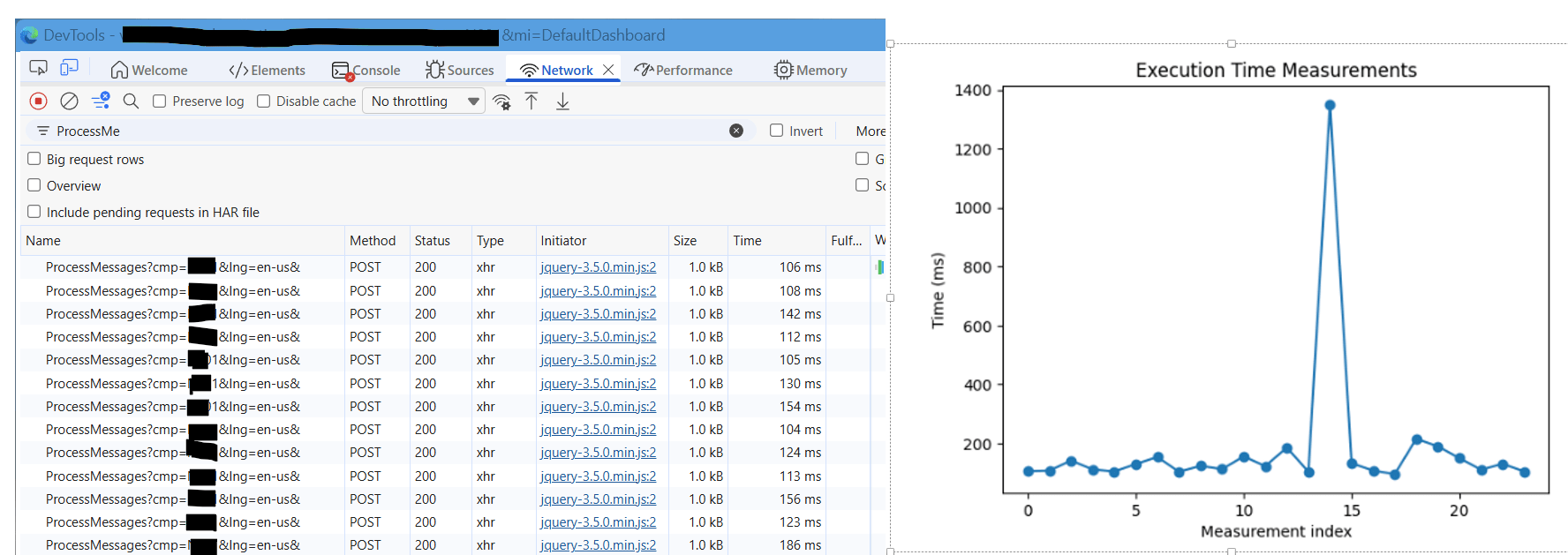

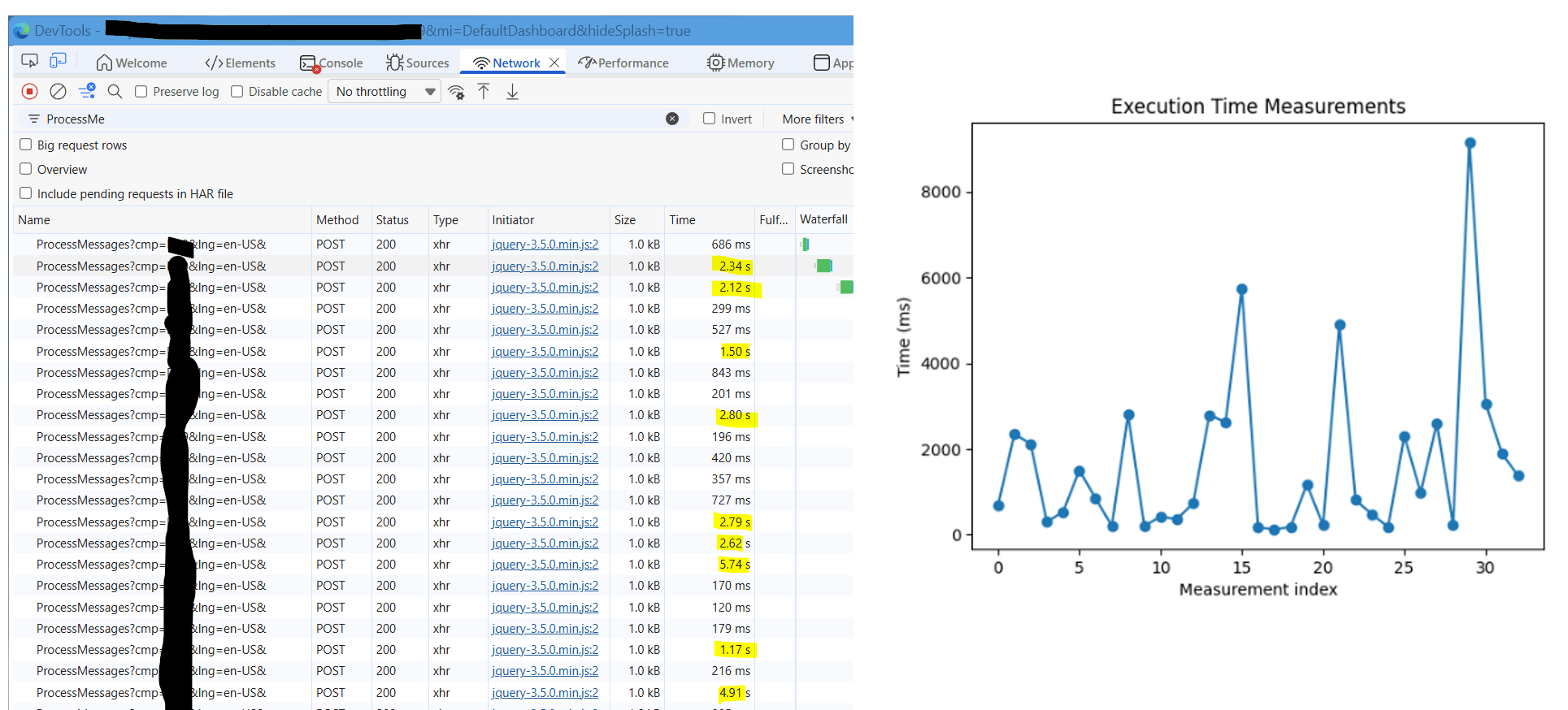

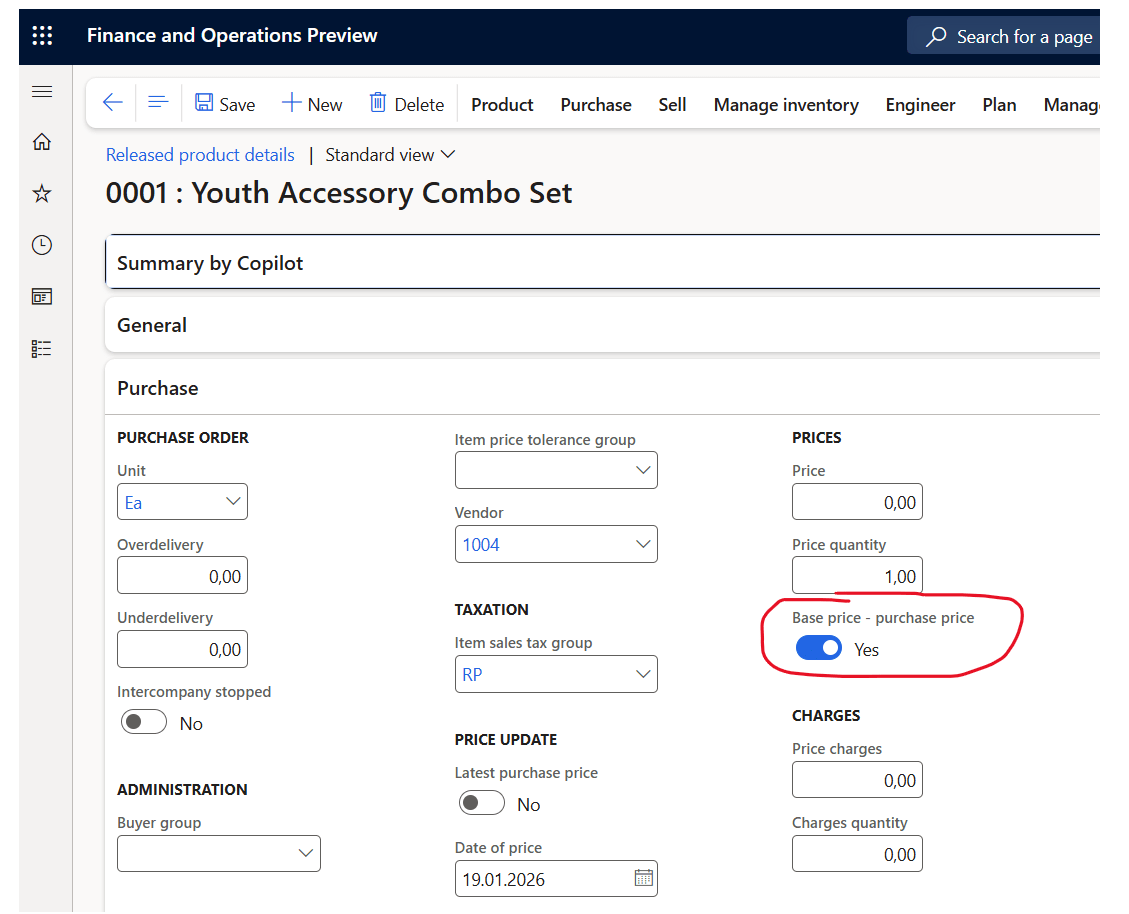

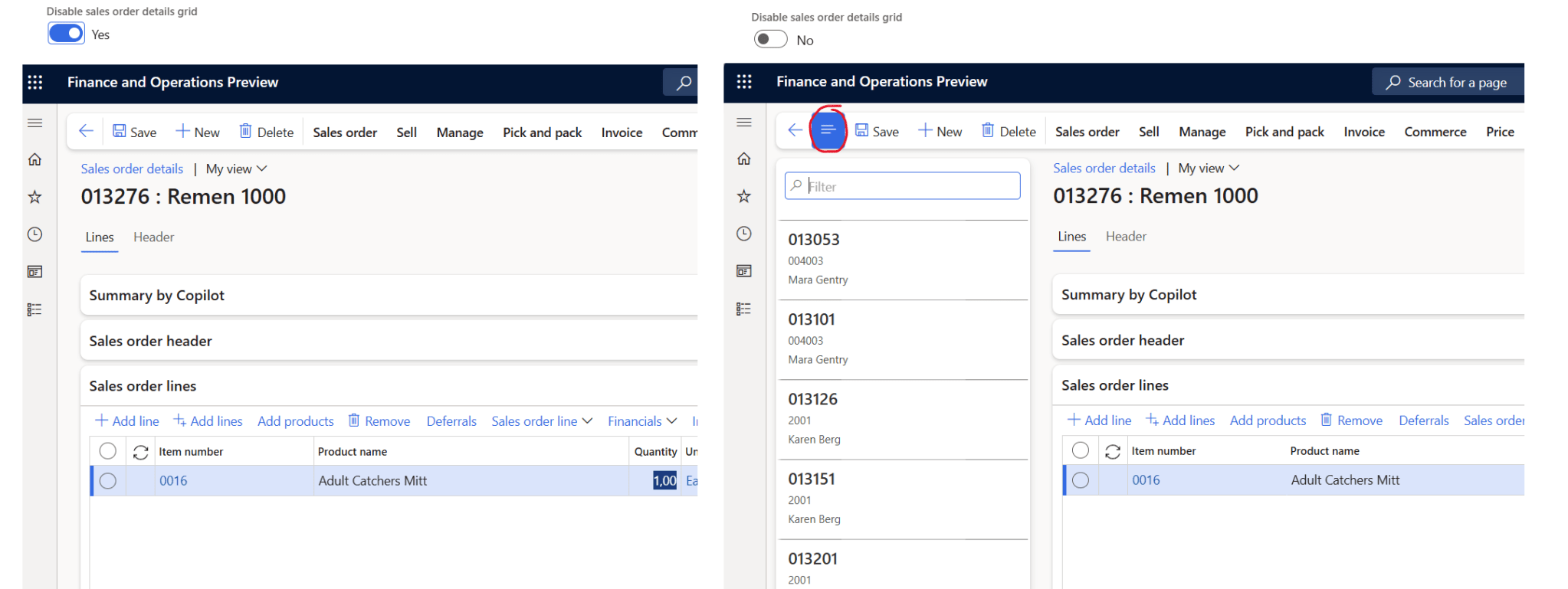

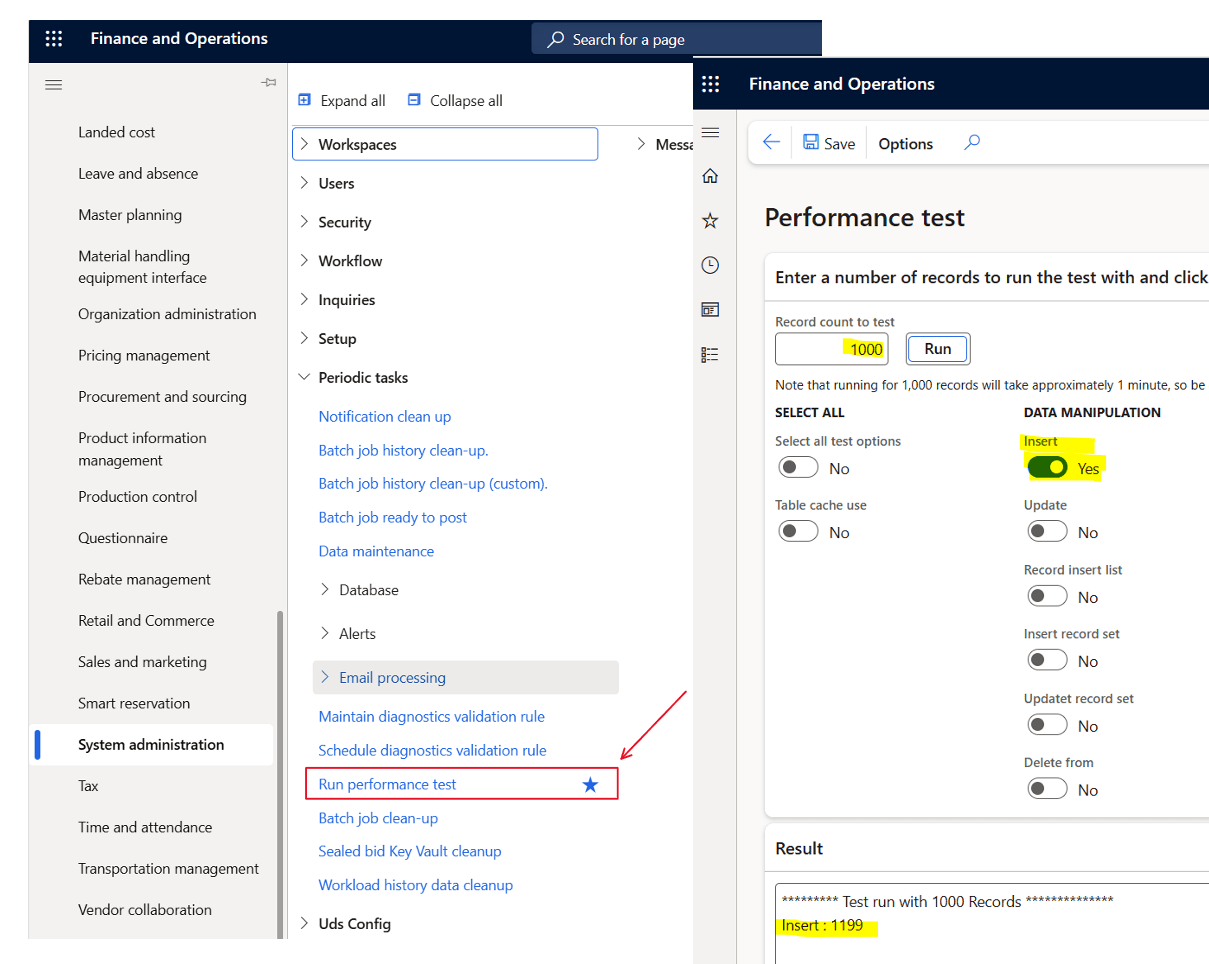

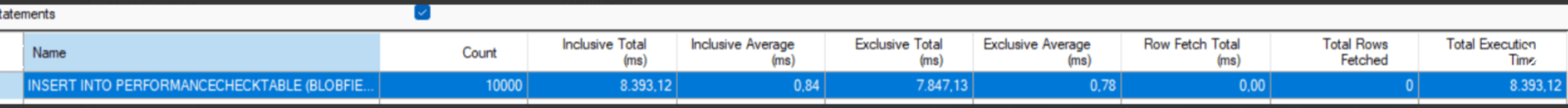

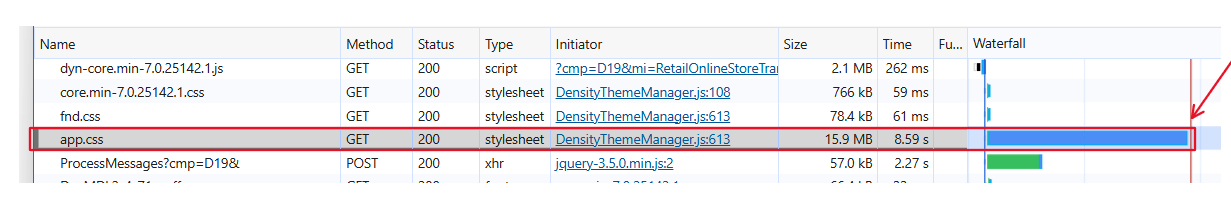

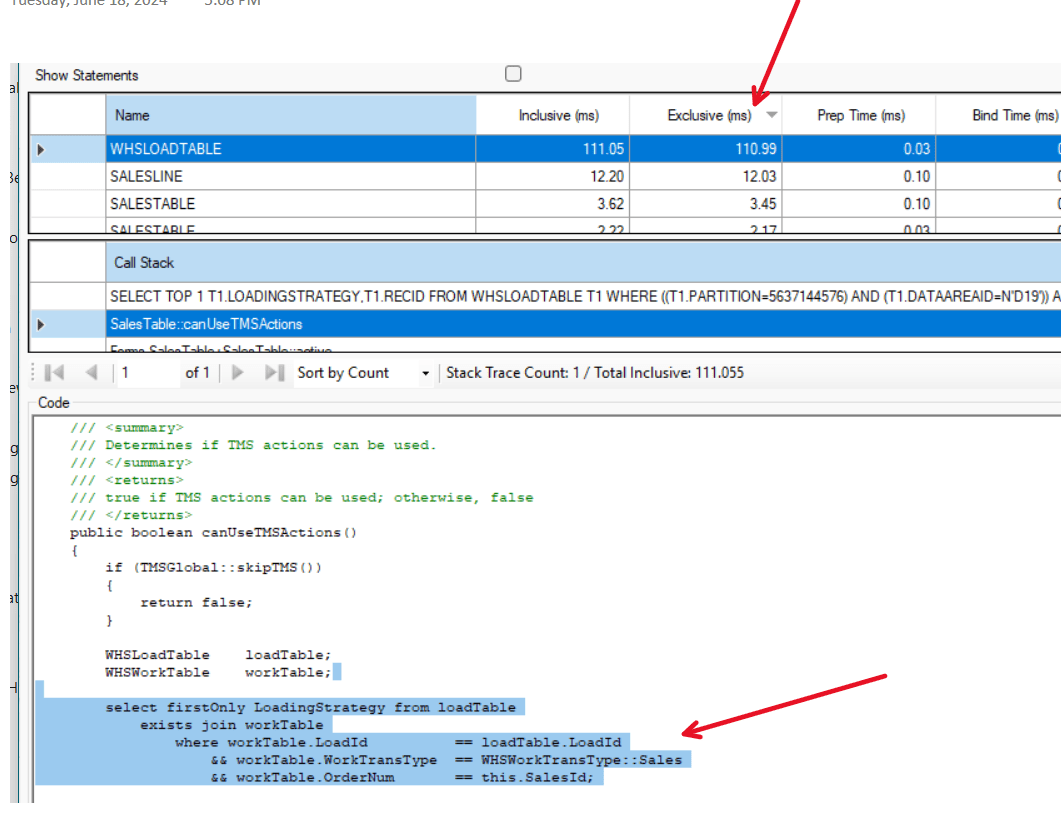

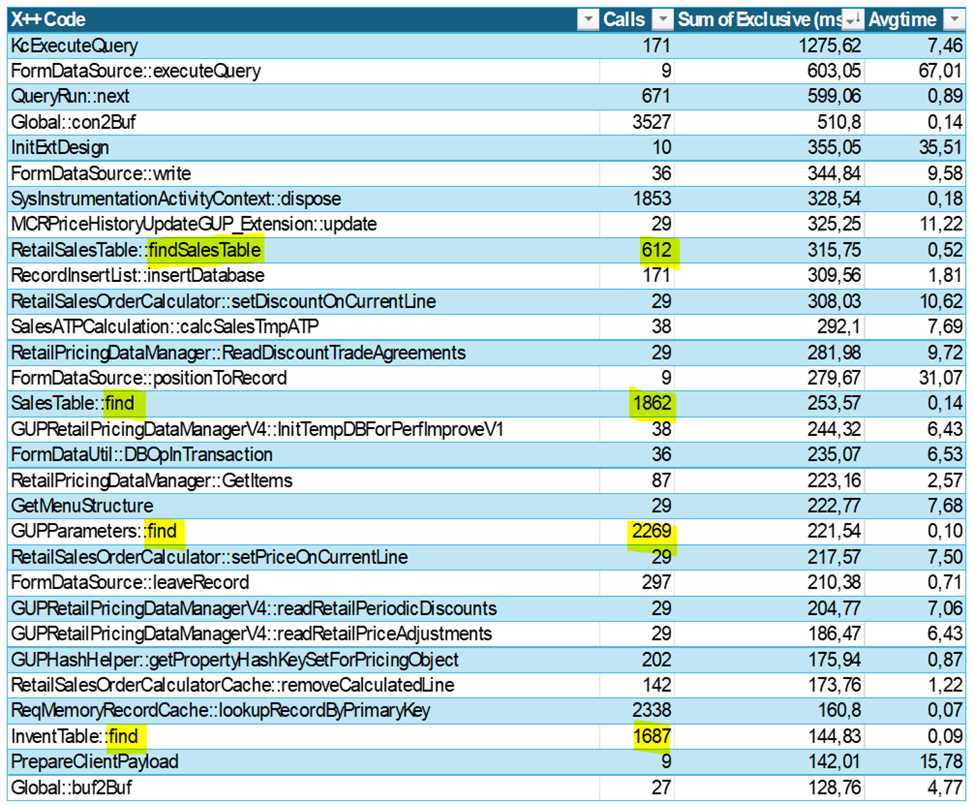

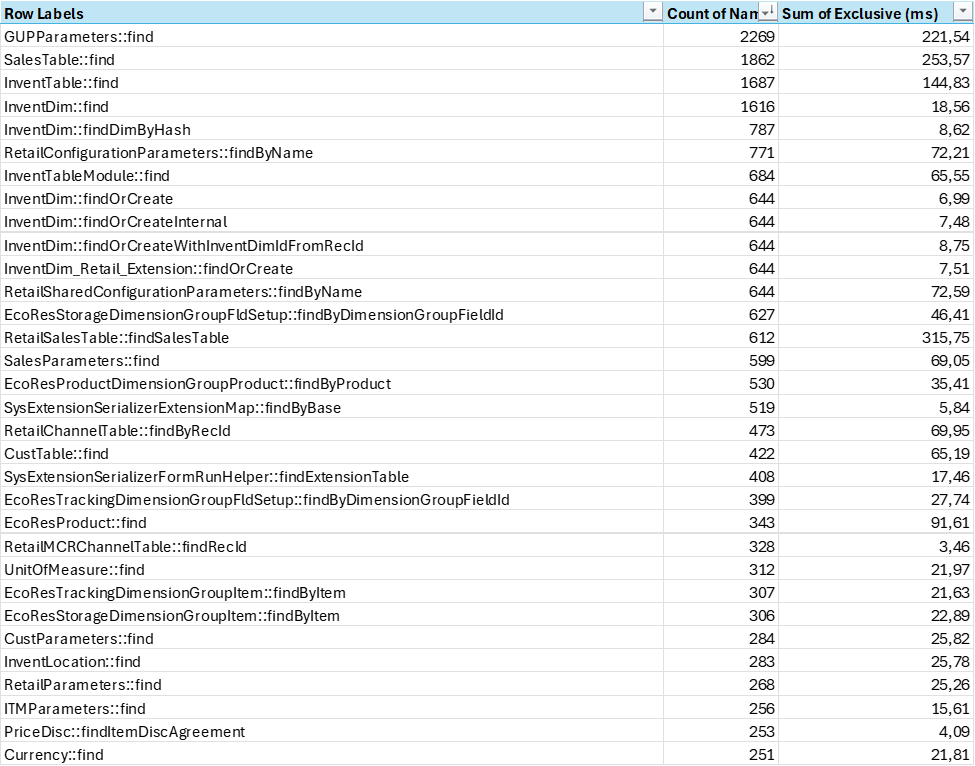

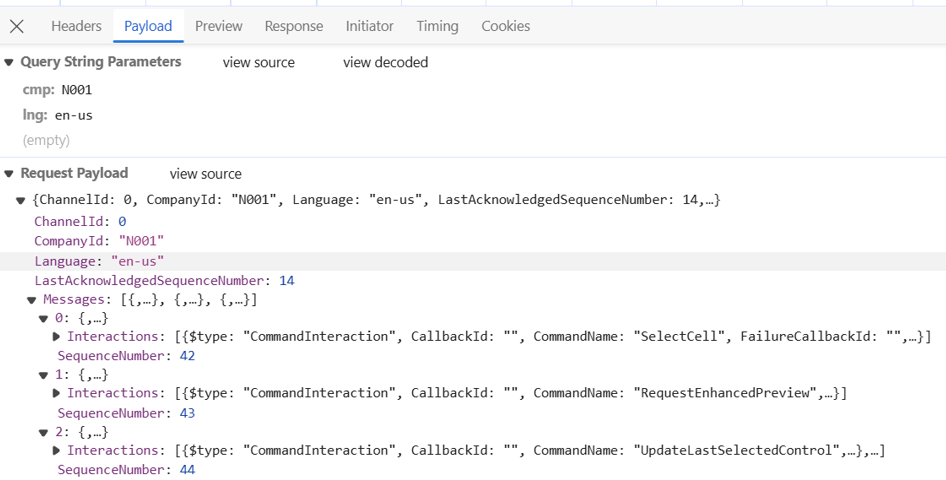

I see several people struggling with copying and pasting sales order lines, especially when the orders are large and there is complex SCM and pricing logic. Depending on how you have set up D365, pasting a sales line can take anywhere from 1.5 s to beyond 6 s per line. And there are much worse examples.

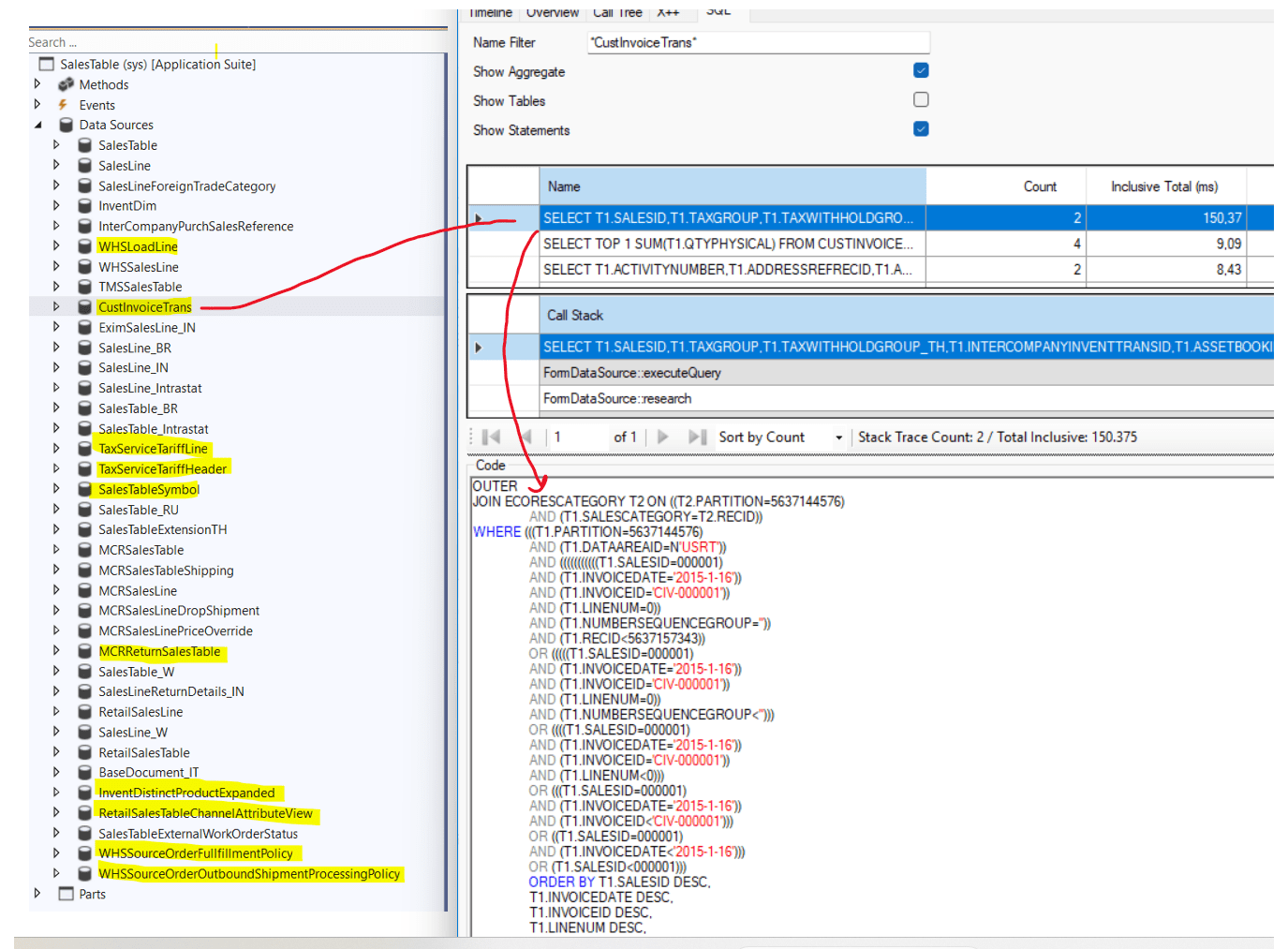

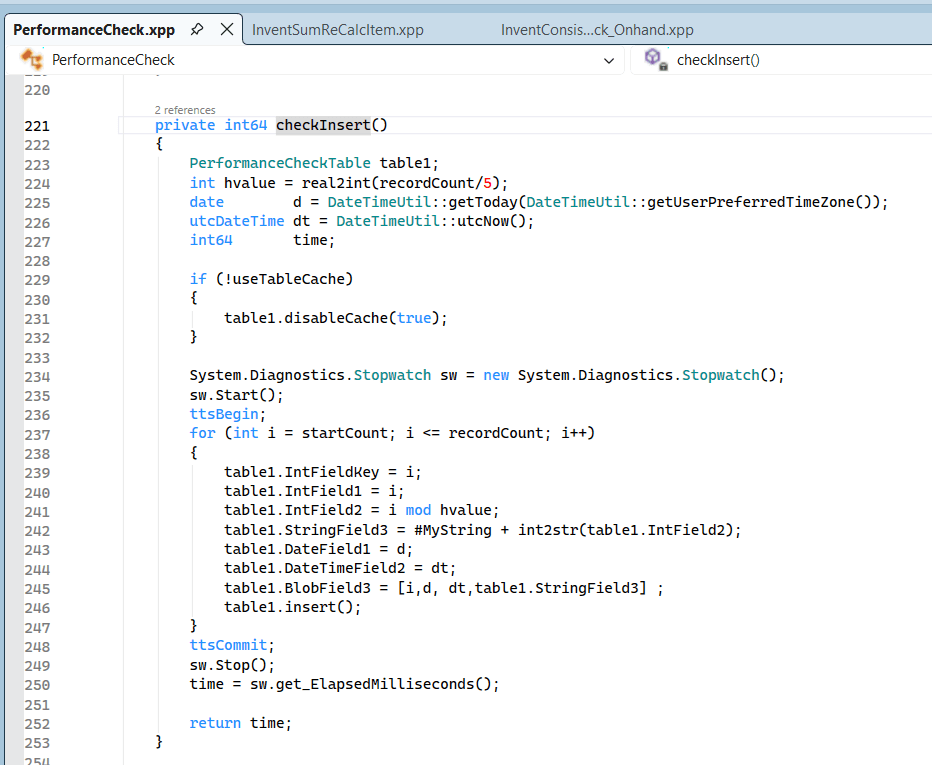

I have dug into all the code and SQL statements that are executed, and there is a LOT going on.

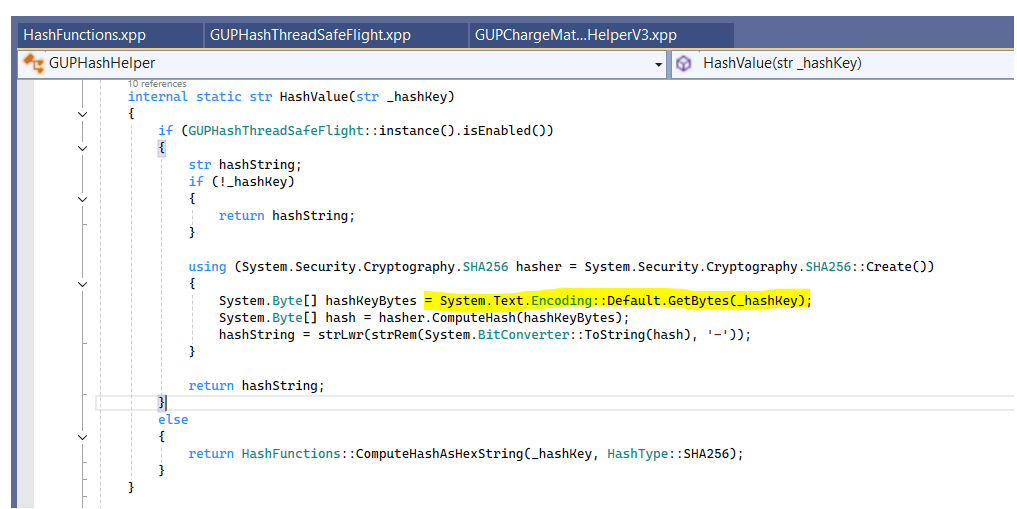

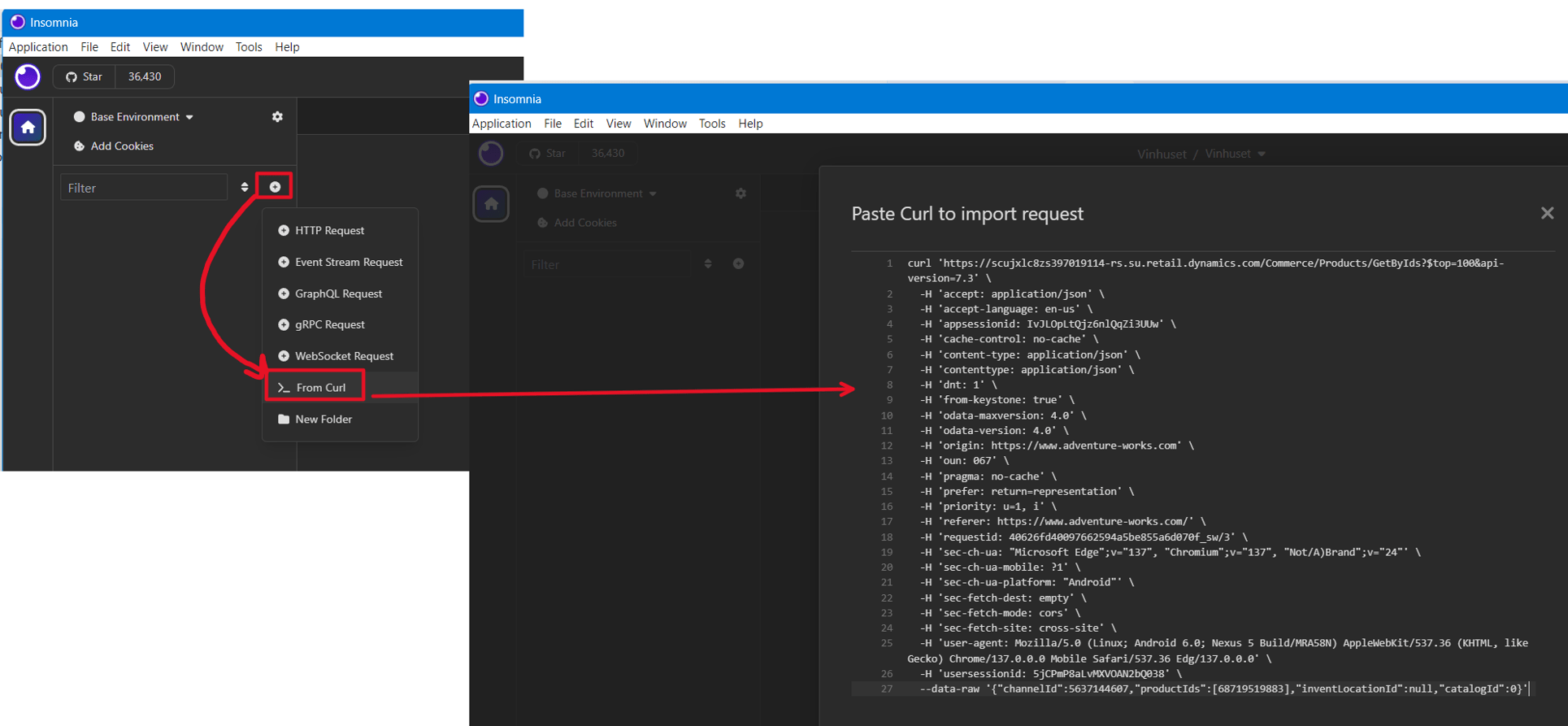

So I asked myself: can I do better with AI? Should I spend a few minutes on “vibe coding”?

My idea is not to paste into the grid, but rather to paste into a text field and then have a single class create all the sales lines within the same TTS.

So I asked ChatGPT for it. After a few iterations, it actually created a class that does exactly that. I just needed to add a menu item and then add it to the sales lines form.

Here is the solution it created:

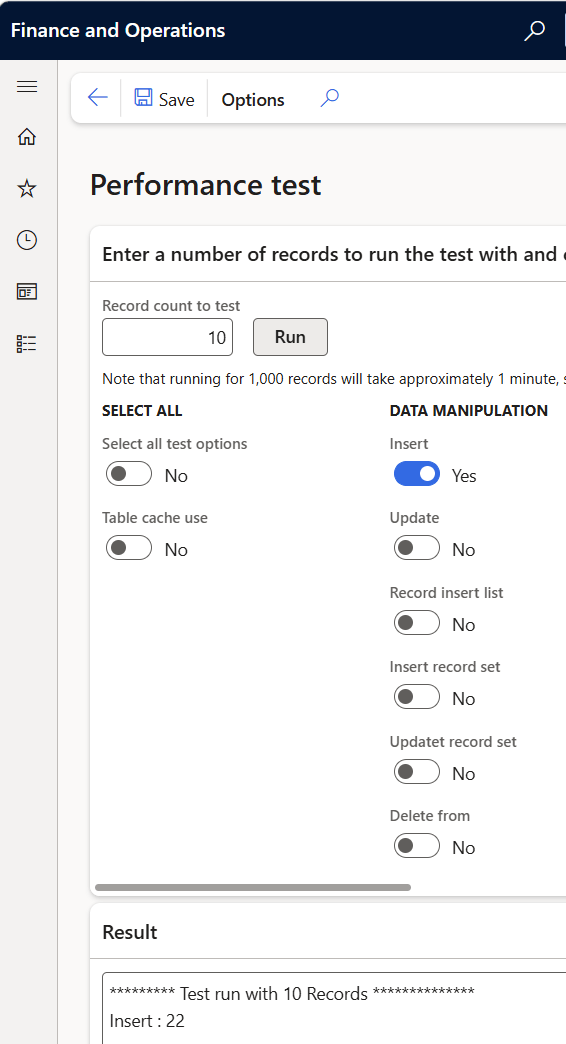

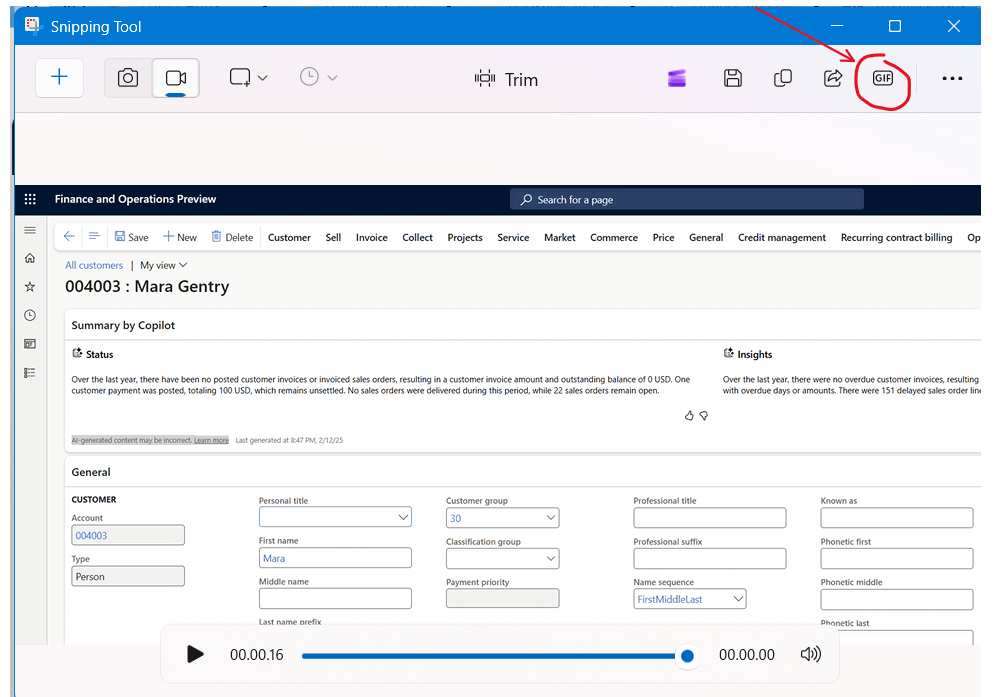

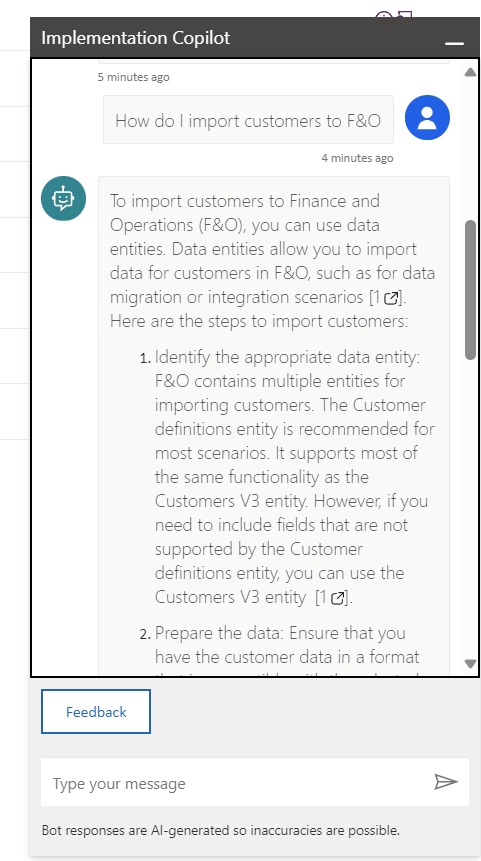

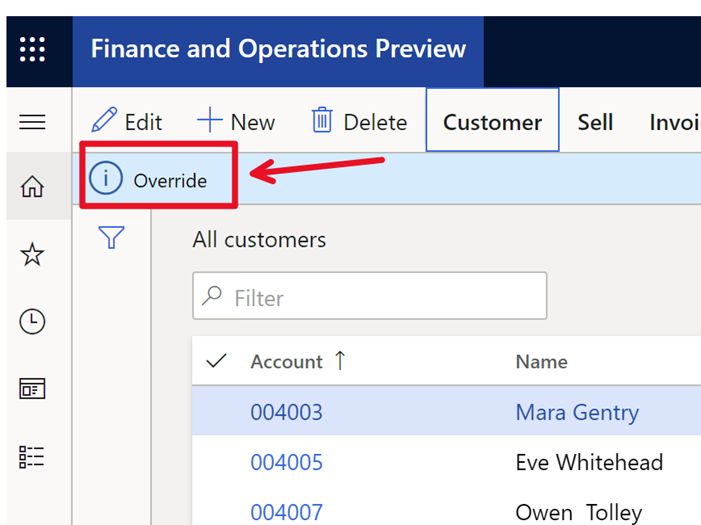

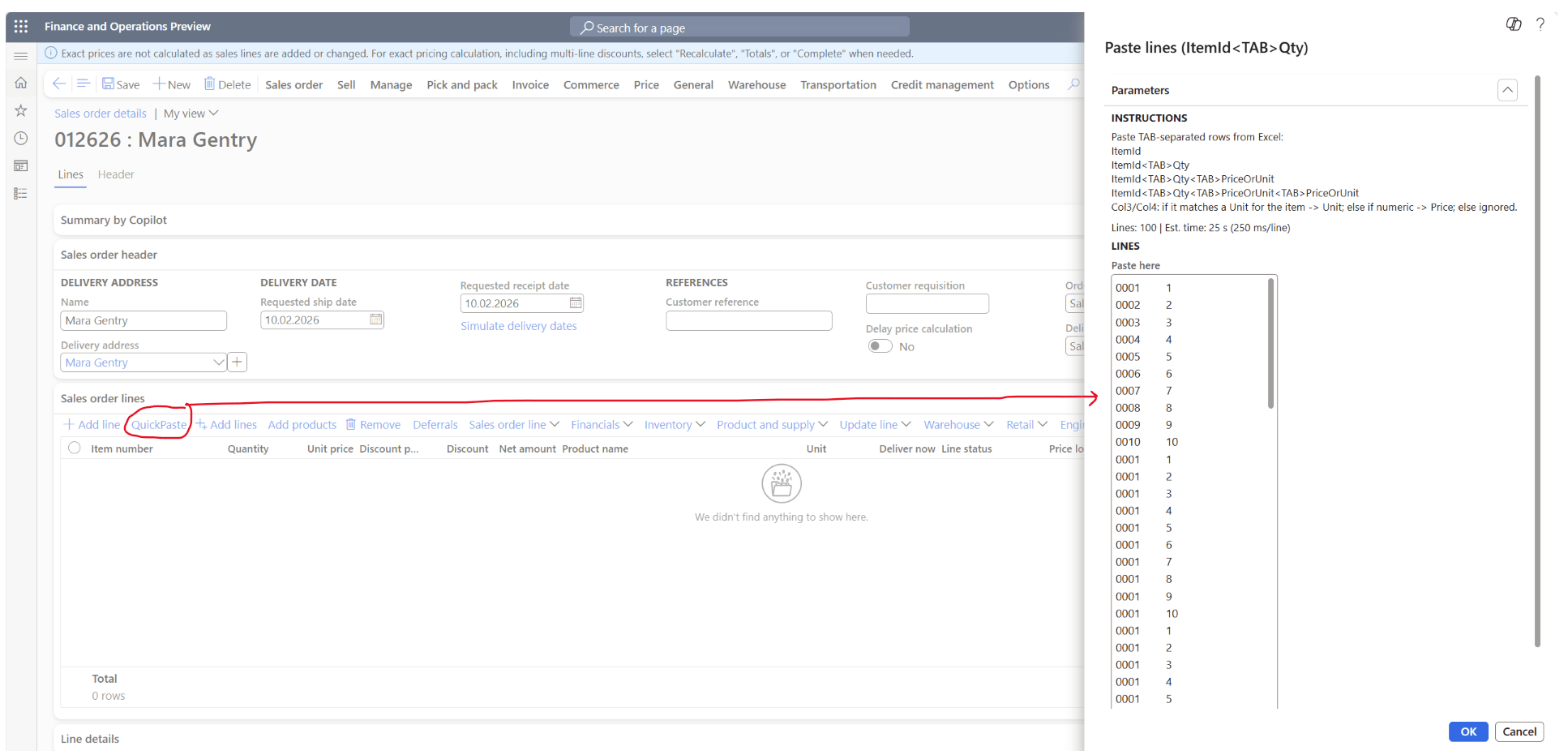

We have a “QuickPaste” button on the sales order form that brings up a dialog where we can paste item[tab]Qty rows. I also had it create an estimator that shows how long it would take to create the 100 lines.
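To make the paste format and the estimator concrete, here is a minimal sketch in Python (chosen only so it can run outside D365; it is not the X++ from this post). It assumes rows of item[tab]Qty, a quantity that defaults to 1 when missing or invalid, and the 250 ms per line figure the dialog uses. The function names `parse_rows` and `estimate_seconds` are hypothetical.

```python
import math

MS_PER_LINE = 250  # assumed per-line cost, the same figure the dialog shows


def parse_rows(text):
    """Split pasted text into (item_id, qty) tuples; blank rows are skipped."""
    rows = []
    for raw in text.replace("\r", "").split("\n"):
        row = raw.strip()
        if not row:
            continue
        cols = [c.strip() for c in row.split("\t")]
        item_id = cols[0]
        qty = 1.0  # default when Qty is omitted or invalid
        if len(cols) > 1:
            try:
                parsed = float(cols[1])
                if parsed > 0:
                    qty = parsed
            except ValueError:
                pass  # not a number: keep the default
        rows.append((item_id, qty))
    return rows


def estimate_seconds(line_count, ms_per_line=MS_PER_LINE):
    """Estimated duration, rounded up to whole seconds."""
    return math.ceil(line_count * ms_per_line / 1000)


pasted = "A0001\t5\r\nA0002\n\nA0003\tabc\n"
print(parse_rows(pasted))
print(estimate_seconds(100))
```

With 100 pasted rows the estimate comes out at 25 seconds, which matches what the dialog shows before clicking OK.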

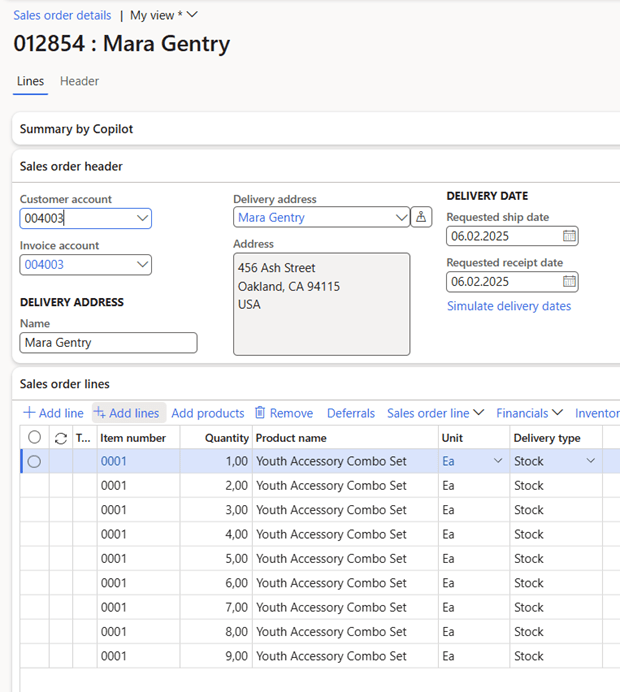

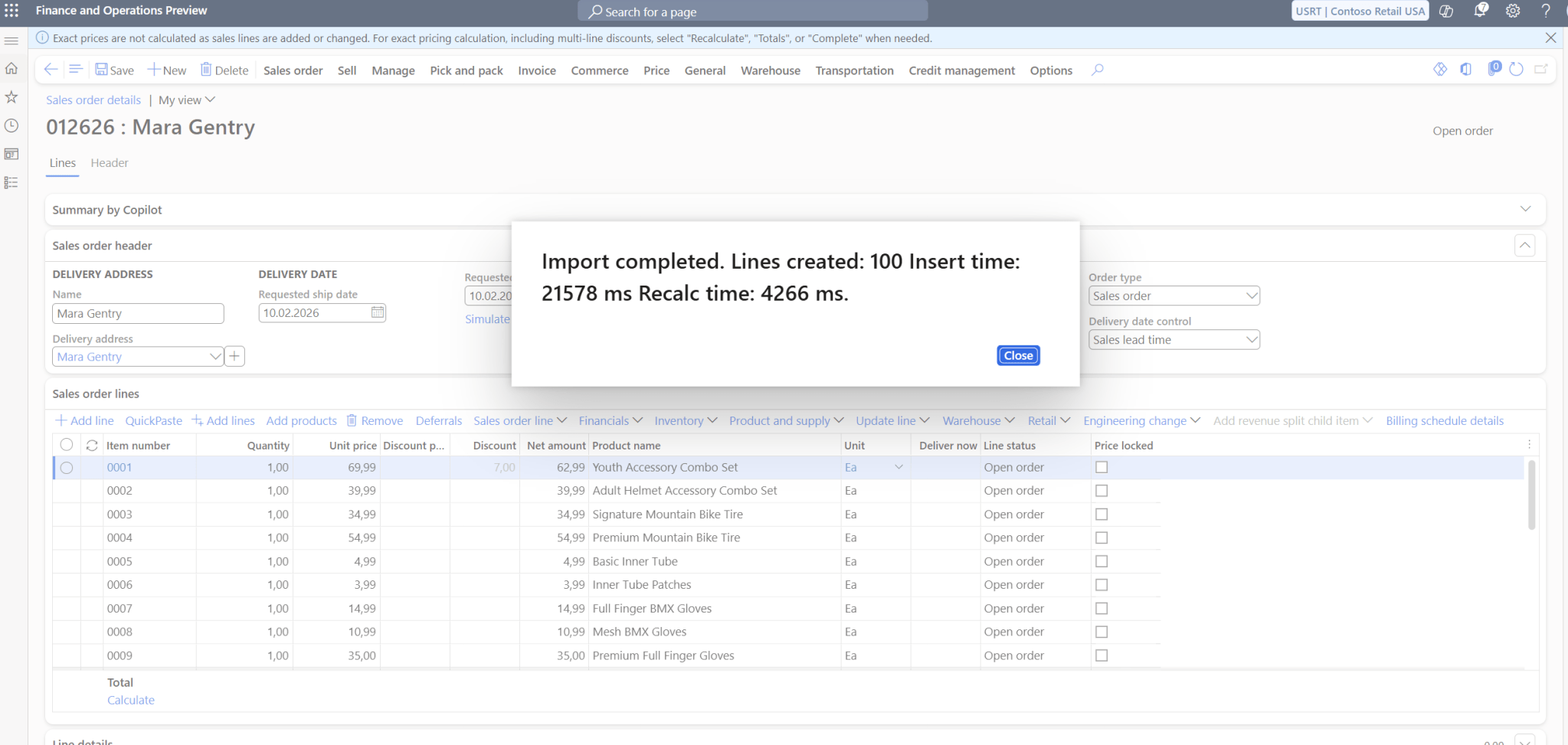

Next, after clicking “OK” and waiting about 25 s, I have a sales order with 100 lines:

Nice 🙂 245 ms per line in a UDE sandbox is not bad. In a production system, I hope to see it drop even further, towards 100 ms per sales line.
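To put those numbers side by side, here is a small arithmetic sketch in Python (again only for illustration outside D365). It uses the figures quoted in this post; the 24.5 s total is inferred from 245 ms × 100 lines rather than measured separately, and the helper names are hypothetical.

```python
def per_line_ms(total_seconds, line_count):
    """Average cost per line in milliseconds."""
    return total_seconds * 1000 / line_count


def total_seconds(ms_per_line, line_count):
    """Total duration in seconds for a given per-line cost."""
    return ms_per_line * line_count / 1000


LINES = 100
print(per_line_ms(24.5, LINES))    # QuickPaste in the UDE sandbox
print(total_seconds(1500, LINES))  # grid paste at 1.5 s per line
print(total_seconds(6000, LINES))  # grid paste at 6 s per line
print(total_seconds(100, LINES))   # hoped-for production figure
```

In other words, 100 lines pasted into the grid would take roughly 150 to 600 seconds at the per-line times mentioned earlier, compared with about 25 seconds here.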

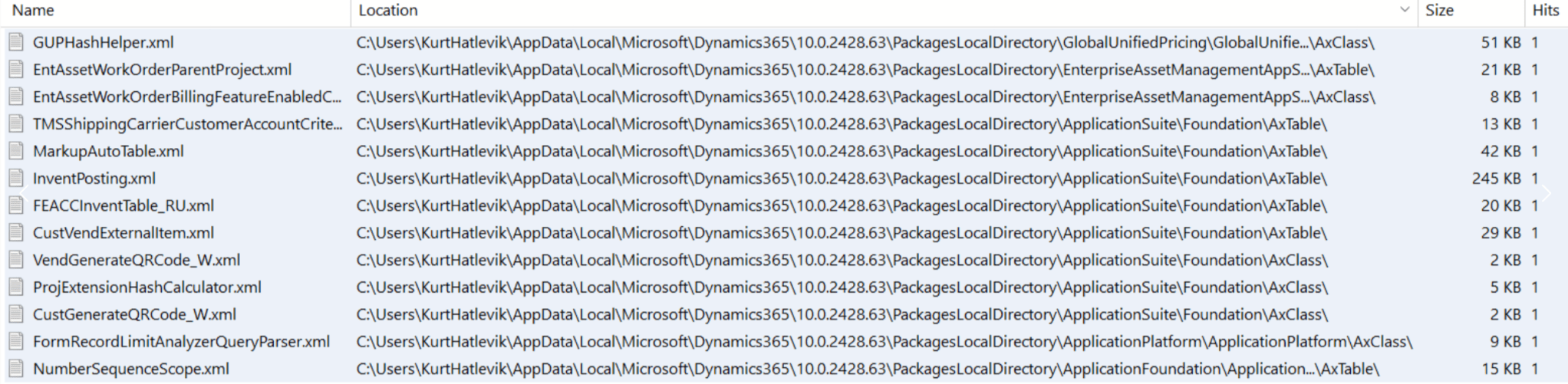

As this is “vibe coding”, the code is not production ready and should be considered an alpha preview. There are tons of possibilities to improve this, and if someone created a GitHub project for it, we could build something for those who hate waiting for copy/paste.

Here is the code for this demo:

/// Action menu item: Class = SalesOrderQuickPasteLines

/// Paste rows: TAB-separated (Excel). Extra columns ignored.

///

/// Formats:

/// 1) ItemId

/// 2) ItemId[TAB]Qty

/// 3) ItemId[TAB]Qty[TAB]{PriceOrUnit}

/// 4) ItemId[TAB]Qty[TAB]{PriceOrUnit}[TAB]{PriceOrUnit}

///

/// Rules:

/// - Qty defaults to 1 if omitted/invalid.

/// - Price vs Unit ambiguity (col3/col4):

/// * If value matches a Unit for the item => treat as SalesUnit

/// * Else if numeric => treat as Price

/// * Else ignore

/// - If both are supplied across col3/col4, both are applied.

/// - >4 columns ignored.

///

/// Behavior:

/// - Insert in ONE TTS; abort on first failing row (ttsAbort + row/why).

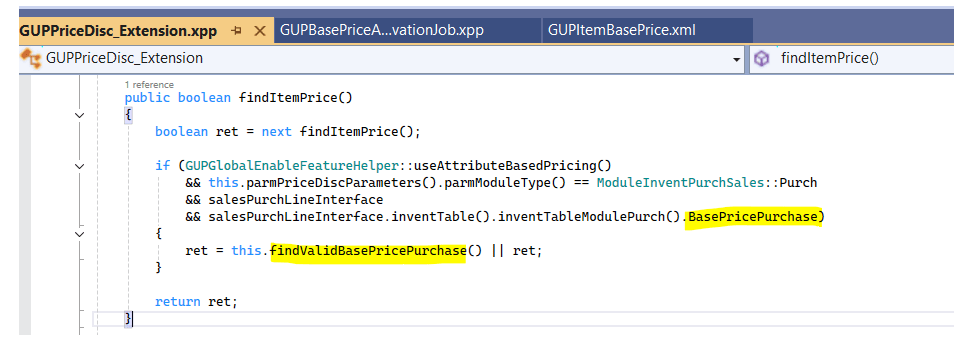

/// - st.GUPDelayPricingCalculation=Yes during insert TTS; restored before commit.

/// - sl.SkipCreateMarkup=Yes on inserted lines; cleared OUTSIDE TTS before retail recalc.

/// - Retail recalc OUTSIDE TTS: MCRSalesTableController::recalculateRetailPricesDiscounts(st)

/// - Refresh SalesLine grid via caller datasource executeQuery.

///

/// UI:

/// - Single-column dialog layout (no side-by-side groups)

/// - Instructions + Estimate as static text (no boxes)

/// - Large multiline Notes paste box

/// - Estimate updates immediately on paste/typing

class SalesOrderQuickPasteLines extends RunBase

{

#define.ProgressEvery(20)

#define.MaxErr(4000)

#define.MsPerLine(250)

#define.AggregateSameItemId(false)

SalesId salesId;

FormRun callerFr;

str pasteText;

DialogGroup gIntro, gLines;

// Static texts (no boxes)

DialogText dtHelp, dtEst;

// Paste field

DialogField dfLines;

// Controls we touch at runtime

FormStringControl cLines;

public static void main(Args _a)

{

SalesTable st = _a ? _a.record() : null;

if (!st || st.TableId != tableNum(SalesTable))

throw error("Run from SalesTable.");

SalesOrderQuickPasteLines o = new SalesOrderQuickPasteLines();

o.parmSalesId(st.SalesId);

o.parmCaller(_a ? _a.caller() as FormRun : null);

if (o.prompt())

o.runOperation();

}

public boolean canRunInNewSession()

{

return false;

}

public SalesId parmSalesId(SalesId _v = salesId)

{

salesId = _v;

return salesId;

}

public FormRun parmCaller(FormRun _v = callerFr)

{

callerFr = _v;

return callerFr;

}

// ---------------- Dialog ----------------

public Object dialog()

{

Dialog d = super();

d.caption("Paste lines (ItemId[TAB]Qty)");

gIntro = d.addGroup("Instructions");

gIntro.columns(1);

dtHelp = d.addText(

"Paste TAB-separated rows from Excel:\n"

+ " ItemId\n"

+ " ItemId[TAB]Qty\n"

+ " ItemId[TAB]Qty[TAB]PriceOrUnit\n"

+ " ItemId[TAB]Qty[TAB]PriceOrUnit[TAB]PriceOrUnit\n"

+ "Col3/Col4: if it matches a Unit for the item -> Unit; else if numeric -> Price; else ignored."

);

dtEst = d.addText("Lines: 0 | Est. time: 0 s (250 ms/line)");

// Critical: nested group prevents two-column layout

gLines = d.addGroup("Lines", gIntro);

gLines.columns(1);

// Notes + ignore EDT constraints to avoid truncation

dfLines = d.addField(extendedTypeStr(Notes), "Paste here", "", true);

dfLines.value("");

dfLines.displayLength(200);

dfLines.displayHeight(28);

return d;

}

public void dialogPostRun(DialogRunbase _d)

{

super(_d);

cLines = dfLines.control() as FormStringControl;

if (cLines)

{

// Update estimate immediately on paste/typing (not only on focus leave)

cLines.registerOverrideMethod(

methodStr(FormStringControl, textChange),

methodStr(SalesOrderQuickPasteLines, lines_textChange),

this);

}

this.updateEstimate(cLines ? cLines.text() : "");

}

public void lines_textChange(FormStringControl _ctrl)

{

this.updateEstimate(_ctrl ? _ctrl.text() : "");

}

public boolean getFromDialog()

{

boolean ok = super();

pasteText = strLRTrim(dfLines.value());

return ok;

}

// ---------------- Execution ----------------

public void run()

{

if (!pasteText)

return;

// parse returns [lineNo,itemId,qty,c3,c4]

List rows = this.parse(pasteText);

int inputCount = rows.elements();

if (!inputCount)

throw error("No valid rows. Expected at least ItemId per line.");

int64 t0 = WinAPIServer::getTickCount();

int created = this.insertLinesInOneTts(rows, inputCount);

int64 insertMs = WinAPIServer::getTickCount() - t0;

int64 t1 = WinAPIServer::getTickCount();

this.postCommitRetailRecalc();

int64 recalcMs = WinAPIServer::getTickCount() - t1;

this.refreshSalesLineDs();

Box::info(

strFmt("Import completed.\nLines created: %1\nInsert time: %2 ms\nRecalc time: %3 ms.",

created, insertMs, recalcMs),

"QuickPaste");

}

// ---------------- Live ETA ----------------

private void updateEstimate(str _text)

{

int n = this.estimateLineCount(_text);

int64 ms = n * #MsPerLine;

int sec = any2int((ms + 999) / 1000);

str s = strFmt("Lines: %1 | Est. time: %2 s (%3 ms/line)", n, sec, #MsPerLine);

if (dtEst)

dtEst.text(s);

}

private int estimateLineCount(str _text)

{

if (!_text)

return 0;

_text = strReplace(_text, "\r", "");

List raw = Global::strSplit(_text, "\n");

ListEnumerator e = raw.getEnumerator();

int n = 0;

while (e.moveNext())

{

if (strLRTrim(e.current()))

n++;

}

return n;

}

// ---------------- Unit/Price helpers ----------------

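// Caution: X++ passes parameters by value, so assignments to _out are not visible to the caller; returning a container such as [ok, value] would be one way to address this.

private boolean tryParseReal(str _s, real _out)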

{

_s = strLRTrim(_s);

if (!_s)

{

_out = 0.0;

return false;

}

try

{

_out = any2real(_s);

return true;

}

catch

{

_out = 0.0;

return false;

}

}

private boolean unitExistsForItem(InventTable _it, SalesUnit _unit)

{

if (!_unit)

return false;

if (_it && _it.salesUnitId() == _unit)

return true;

try

{

if (UnitOfMeasure::findBySymbol(_unit).RecId)

return true;

}

catch

{

}

return false;

}

// ---------------- Parsing ----------------

// Returns containers: [lineNo, itemId, qty, c3, c4]

private List parse(str _in)

{

List lines = new List(Types::Container);

if (!_in)

return lines;

_in = strReplace(_in, "\r", "");

List raw = Global::strSplit(_in, "\n");

ListEnumerator e = raw.getEnumerator();

int ln = 0;

while (e.moveNext())

{

ln++;

str row = strLRTrim(e.current());

if (!row)

continue;

List cols = Global::strSplit(row, "\t");

ListEnumerator ce = cols.getEnumerator();

int col = 0;

str itemStr = "", qtyStr = "", c3 = "", c4 = "";

while (ce.moveNext())

{

col++;

str v = strLRTrim(ce.current());

if (col == 1) itemStr = v;

else if (col == 2) qtyStr = v;

else if (col == 3) c3 = v;

else if (col == 4) c4 = v;

else break;

}

if (!itemStr)

continue;

ItemId itemId = itemStr;

Qty qty = 1;

if (qtyStr)

{

real rQty;

if (this.tryParseReal(qtyStr, rQty) && rQty > 0)

qty = rQty;

}

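if (qty <= 0)

qty = 1;

lines.addEnd([ln, itemId, qty, c3, c4]);

}

return lines;

}

// ---------------- Insert (one TTS) ----------------

// Reconstructed: the original text from the qty check above down to the Map declaration was lost when the post was published; the signature and declarations below are inferred from how they are used later.

private int insertLinesInOneTts(List _rows, int _inputCount)

{

SalesTable st = SalesTable::find(salesId, true);

if (!st.RecId)

throw error(strFmt("Sales order %1 not found.", salesId));

int created = 0;

NoYes origDelay;

SysOperationProgress p = new SysOperationProgress();

p.setCaption("QuickPaste");

p.setTotal(_inputCount);

Map inv = new Map(Types::String, Types::Record); // ItemId -> InventTable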

// Resolve to: [lineNo,itemId,qty,price,SalesUnit]

List work = new List(Types::Container);

ListEnumerator pe = _rows.getEnumerator();

while (pe.moveNext())

{

container pc = pe.current();

int lineNo = conPeek(pc, 1);

ItemId itemId = conPeek(pc, 2);

Qty qty = conPeek(pc, 3);

str c3 = conPeek(pc, 4);

str c4 = conPeek(pc, 5);

InventTable it;

if (inv.exists(itemId))

it = inv.lookup(itemId);

else

{

it = InventTable::find(itemId, true);

if (!it.RecId)

throw error(this.err(lineNo, itemId, qty, "Item does not exist."));

inv.insert(itemId, it);

}

Price price = 0;

SalesUnit unit = "";

real r;

if (c3)

{

SalesUnit u3 = c3;

if (this.unitExistsForItem(it, u3))

unit = u3;

else if (this.tryParseReal(c3, r))

price = r;

}

if (c4)

{

SalesUnit u4 = c4;

if (!unit && this.unitExistsForItem(it, u4))

unit = u4;

else if (!price && this.tryParseReal(c4, r))

price = r;

}

work.addEnd([lineNo, itemId, qty, price, unit]);

}

// Optional aggregation by item+unit+price only

if (#AggregateSameItemId)

{

Map keyQty = new Map(Types::String, Types::Real);

Map keyLine = new Map(Types::String, Types::Integer);

ListEnumerator ae = work.getEnumerator();

while (ae.moveNext())

{

container c = ae.current();

int lineNo = conPeek(c, 1);

ItemId itemId = conPeek(c, 2);

Qty qty = conPeek(c, 3);

Price price = conPeek(c, 4);

SalesUnit unit = conPeek(c, 5);

str key = strFmt("%1|%2|%3", itemId, unit, price);

if (!keyLine.exists(key))

keyLine.insert(key, lineNo);

real prev = keyQty.exists(key) ? keyQty.lookup(key) : 0.0;

keyQty.insert(key, prev + qty);

}

work = new List(Types::Container);

MapEnumerator me = keyQty.getEnumerator();

while (me.moveNext())

{

str key = me.currentKey();

real qtySum = me.currentValue();

List parts = Global::strSplit(key, "|");

ListEnumerator le = parts.getEnumerator();

ItemId itemId; SalesUnit unit; Price price;

int idx = 0;

while (le.moveNext())

{

idx++;

str v = le.current();

if (idx == 1) itemId = v;

else if (idx == 2) unit = v;

else if (idx == 3) price = any2real(v);

}

int lineNo = keyLine.lookup(key);

work.addEnd([lineNo, itemId, qtySum, price, unit]);

}

}

int progressCounter = 0;

int64 t0 = WinAPIServer::getTickCount();

int workTotal = work.elements();

ttsBegin;

try

{

origDelay = st.GUPDelayPricingCalculation;

st.GUPDelayPricingCalculation = NoYes::Yes;

st.doUpdate();

ListEnumerator e2 = work.getEnumerator();

while (e2.moveNext())

{

container c2 = e2.current();

int lineNo = conPeek(c2, 1);

ItemId itemId = conPeek(c2, 2);

Qty qty = conPeek(c2, 3);

Price price = conPeek(c2, 4);

SalesUnit unit = conPeek(c2, 5);

InventTable it = inv.lookup(itemId);

this.createFast(st, it, qty, price, unit, lineNo);

created++;

progressCounter++;

if (progressCounter >= #ProgressEvery || created == workTotal)

{

progressCounter = 0;

int64 elapsed = WinAPIServer::getTickCount() - t0;

real avgMs = created ? (elapsed / created) : 0;

real remMs = avgMs * (workTotal - created);

p.setText(strFmt("Created %1/%2. Est. remaining: %3 s",

created, workTotal, any2int(remMs / 1000)));

}

p.incCount(1);

}

st = SalesTable::find(this.salesId, true);

st.GUPDelayPricingCalculation = origDelay;

st.doUpdate();

ttsCommit;

}

catch (Exception::Error)

{

ttsAbort;

throw;

}

return created;

}

private void createFast(SalesTable _st, InventTable _it, Qty _qty, Price _price, SalesUnit _unit, int _lineNo)

{

try

{

SalesLine sl;

sl.clear();

sl.initValue();

sl.SalesId = _st.SalesId;

sl.ItemId = _it.ItemId;

sl.SalesQty = _qty;

sl.SalesUnit = _unit ? _unit : _it.salesUnitId();

if (_price && _price > 0)

{

sl.SalesPrice = _price;

sl.PriceUnit = 1;

}

sl.SkipCreateMarkup = NoYes::Yes;

sl.createLine(false, true, true, false, false, false);

}

catch (Exception::Error)

{

throw error(this.err(_lineNo, _it.ItemId, _qty, this.lastInfo(#MaxErr)));

}

}

// ---------------- Post-commit retail recalc OUTSIDE TTS ----------------

private void postCommitRetailRecalc()

{

SalesTable st = SalesTable::find(this.salesId, true);

SalesLine salesLine;

update_recordset salesLine

setting SkipCreateMarkup = NoYes::No

where salesLine.SalesId == st.SalesId

&& salesLine.SkipCreateMarkup == NoYes::Yes;

MCRSalesTableController::recalculateRetailPricesDiscounts(st);

}

private void refreshSalesLineDs()

{

if (!callerFr)

return;

FormDataSource ds = callerFr.dataSource(formDataSourceStr(SalesTable, SalesLine));

if (ds)

ds.executeQuery();

}

private str err(int _n, ItemId _i, Qty _q, str _r)

{

_r = strLRTrim(_r);

if (!_r)

_r = "Unknown error.";

return strFmt("Import failed. Input line %1 (ItemId=%2, Qty=%3). Reason: %4", _n, _i, _q, _r);

}

private str lastInfo(int _max)

{

str t = "";

try

{

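for (int i = infolog.num(); i > 0 && strLen(t) < _max; i--)

{

// Reconstructed loop body (lost in publishing): walk the infolog from the newest message backwards, prepending each one.

t = infolog.text(i) + (t ? "\n" + t : "");

}

}

catch

{

}

if (strLen(t) > _max)

t = substr(t, 1, _max);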

return t;

}

}
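The Price vs Unit rule from the header comment is the least obvious part of the class, so here is a minimal sketch of it in Python (for illustration outside D365 only; it is not the X++ above). The function name `resolve_unit_and_price` is hypothetical, and `known_units` is an assumed stand-in for the unit lookup the class does against the item and the unit of measure table.

```python
def resolve_unit_and_price(col3, col4, known_units):
    """Return (unit, price) from the two optional columns.

    A value that matches a known unit becomes the sales unit; otherwise a
    numeric value becomes the price; anything else is ignored.
    """
    unit, price = "", 0.0
    for value in (col3, col4):
        value = value.strip()
        if not value:
            continue
        if not unit and value in known_units:
            unit = value
            continue
        if not price:
            try:
                price = float(value)
            except ValueError:
                pass  # neither a unit nor a number: ignored
    return unit, price


units = {"pcs", "box"}  # assumed example units
print(resolve_unit_and_price("pcs", "12.5", units))
print(resolve_unit_and_price("12.5", "box", units))
print(resolve_unit_and_price("xyz", "", units))
```

The order of the two columns does not matter: a unit in column 3 and a price in column 4 gives the same result as the reverse, which is what "if both are supplied across col3/col4, both are applied" means.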