What I want to showcase in this blog post is how to use the results from Trace Parser to dig deeper: to see where the application spends its time, and to identify where it can be optimized.

As the application works as designed, there is no point in creating support cases for this. These findings are not bugs but optimization opportunities, and the point is to show how statistical analysis of a trace can extract knowledge and identify R&D investment areas.

My own conclusion on how to improve the paste-to-grid performance is:

- Reduce the number of DB calls needed to create a sales order line. This involves reevaluating both the code and the approach. Saving milliseconds in high-frequency methods and reducing DB chattiness adds up to whole seconds in the overall timing.

- Additional improvements are needed in the Global Unified Pricing code to further optimize calls and reduce the number of DB calls performed. Also explore the possibility to reduce the need for TempDB joins and replace with SQL “IN” support in X++.

- Establish a goal/KPI for sales order line insert when copy/pasting into the grid, where the acceptance criterion on a vanilla Contoso environment is at most 1 s per line.

Setup of the analysis

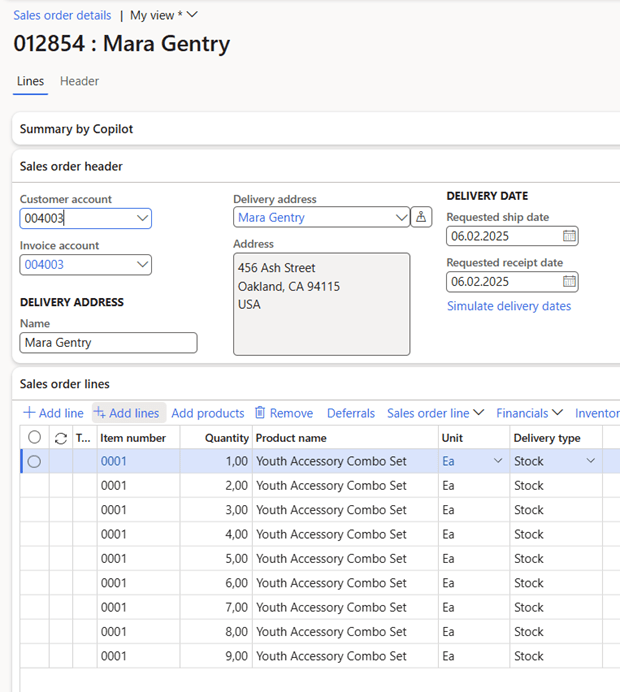

The following analysis was performed on Dynamics 365 SCM version 10.0.43 in a Tier-2 environment. The test itself is quite simple: paste 9 sales order lines into the grid like this:

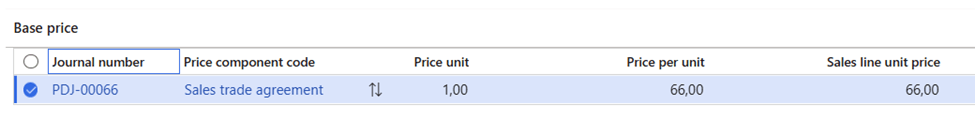

The company is USRT, and product 0001 has only one base price, which sets the price per unit to 66 USD.

The analysis was performed using Trace Parser, and the results were then analyzed in Excel.

Some overall facts

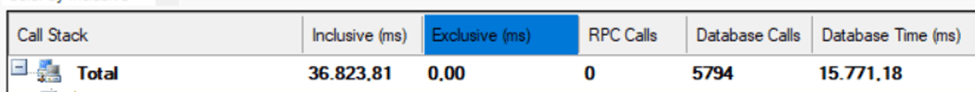

Creating 9 sales order lines took 36.823 ms of execution in total, of which 5.794 database calls took 15.771 ms. In total there were 655.025 X++ entries/method calls in the trace. The average execution time for a SQL statement was 2,72 ms, and the average (exclusive) execution time for an X++ method was 0,0321 ms. The average time per sales order line was 4.091 ms.
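The averages above can be recomputed from the quoted totals. This is a minimal sketch that only uses the figures stated in the text, written as plain numbers (the post uses "." as the thousands separator and "," as the decimal mark). The variable names are mine, and the X++ exclusive total is an estimate derived from the rounded average.

```python
# Figures quoted in the text; variable names are illustrative.
total_ms = 36_823              # total execution time for the paste
db_calls = 5_794               # number of SQL statements
db_ms = 15_771                 # time spent in those statements
xpp_calls = 655_025            # X++ method entries in the trace
xpp_avg_exclusive_ms = 0.0321  # average exclusive time per X++ call
order_lines = 9

avg_sql_ms = db_ms / db_calls
ms_per_line = total_ms / order_lines
# Estimate only: the average above is already rounded.
xpp_exclusive_ms = xpp_calls * xpp_avg_exclusive_ms

print(f"average SQL statement: {avg_sql_ms:.2f} ms")
print(f"time per order line:   {ms_per_line:.0f} ms")
print(f"X++ exclusive total:   {xpp_exclusive_ms:.0f} ms (estimate)")
```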

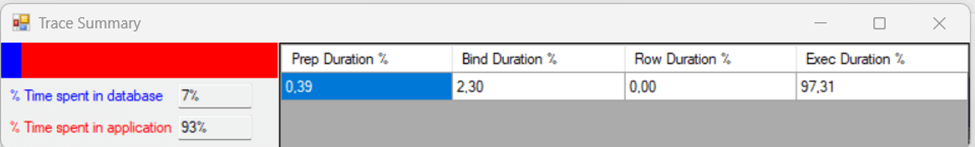

The Trace Summary indicates that 7% of the time was spent in the DB, and 93% was spent in the application (X++).

This indicates that, for this analysis, the DB is fast and the caches are warm.

Analysis of code call frequency

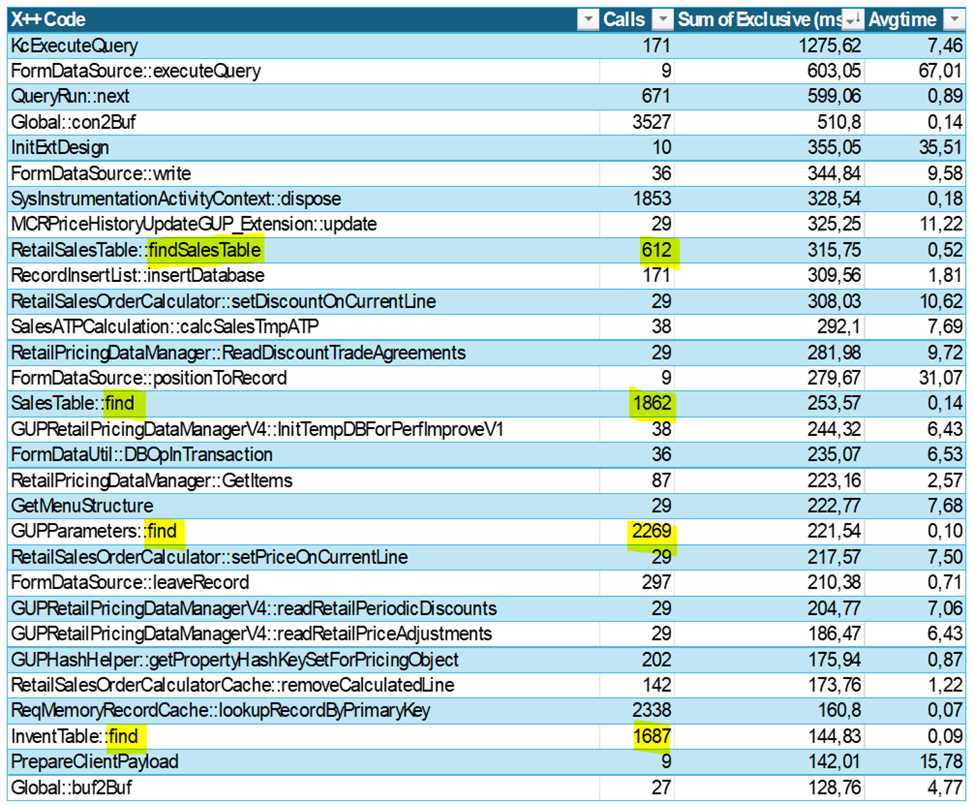

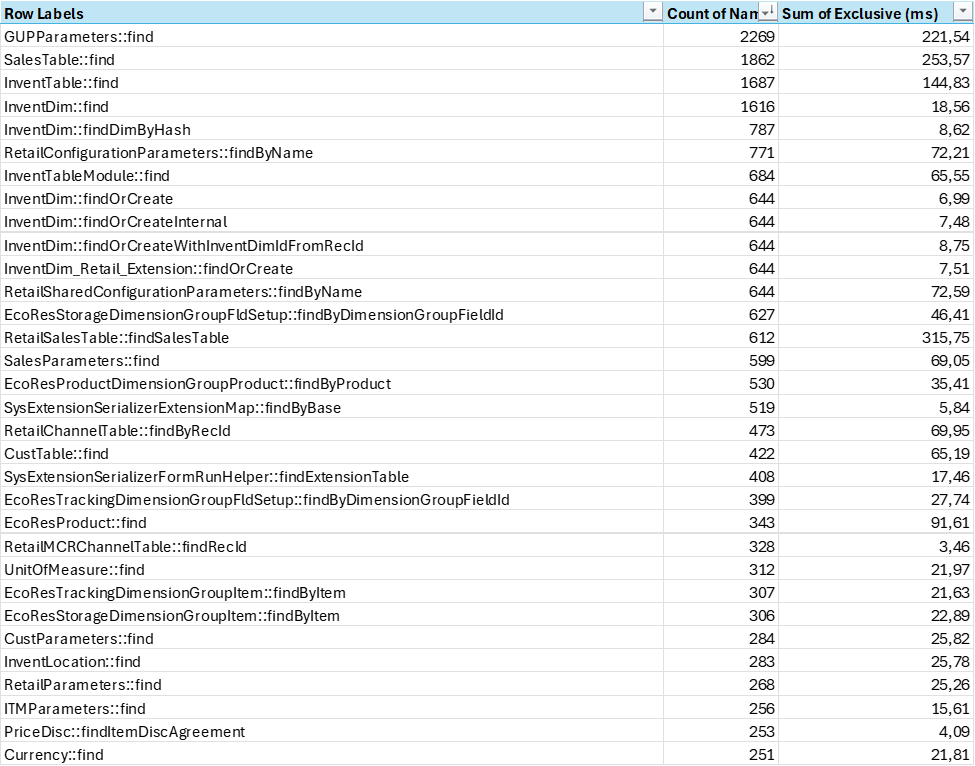

Call frequency indicates how many times the same code is called. If we look at the top 30 methods called, these account for 44% of the X++ execution time.
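The analysis in this post was done in Excel, but the same kind of call-frequency ranking can be sketched in a few lines of Python. The rows below are made-up placeholders, not data from the trace, and the helper names `top_methods` and `share_of_total` are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows: (method name, exclusive time in ms). Not real trace data.
rows = [
    ("Global::con2Buf", 0.02),
    ("Global::con2Buf", 0.03),
    ("SalesLine::insert", 1.50),
    ("InventTable::find", 0.10),
    ("InventTable::find", 0.12),
    ("InventTable::find", 0.08),
]

def top_methods(rows, n):
    """Return the n most frequently called methods as (name, calls, total_ms)."""
    stats = defaultdict(lambda: [0, 0.0])
    for method, ms in rows:
        stats[method][0] += 1
        stats[method][1] += ms
    ranked = sorted(stats.items(), key=lambda kv: kv[1][0], reverse=True)
    return [(name, calls, ms) for name, (calls, ms) in ranked[:n]]

def share_of_total(rows, top):
    """Fraction of all exclusive time spent in the given top methods."""
    total = sum(ms for _, ms in rows)
    return sum(ms for _, _, ms in top) / total

top = top_methods(rows, 2)
share = share_of_total(rows, top)
print(top)
print(f"{share:.0%} of exclusive time")
```

Ranking by call count rather than by time is deliberate here: it surfaces methods that are individually cheap but called so often that they still matter.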

We can observe that the method Global::con2Buf is called 3.527 times. The main reason for this high frequency is SysExtensionSerializedMap, which is used to normalize data instead of extending tables with a lot of fields.

One interesting observation concerns methods whose names contain the string “Find”: they account for 29.624 calls (4,5% of total), and their summed execution time is 3.101 ms (14,7% of total). As the number of calls to find() is so high, there are indications of overhead from transferring whole records, perhaps just to read a single value. Reusing table variables within an execution could reduce the time spent on finding a record (even though we are hitting the cache).
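The idea of reusing a record that has already been fetched can be illustrated with a small language-neutral sketch. This is Python rather than X++, and `ItemTable` and the helper functions are hypothetical stand-ins, not real application classes. It only shows how the number of find() calls changes when the record is kept in a variable.

```python
class ItemTable:
    """Stand-in for a cached table; counts how often find() is called."""

    def __init__(self):
        self.find_calls = 0

    def find(self, item_id):
        self.find_calls += 1
        return {"item_id": item_id, "unit": "ea", "price_group": "base"}

def unit_of(table, item_id):
    return table.find(item_id)["unit"]

def price_group_of(table, item_id):
    return table.find(item_id)["price_group"]

def process_refetching(table, item_ids):
    # Every helper looks the record up again, even for a single field.
    return [(unit_of(table, i), price_group_of(table, i)) for i in item_ids]

def process_reusing(table, item_ids):
    # The record is fetched once per line and the same variable is reused.
    result = []
    for i in item_ids:
        item = table.find(i)
        result.append((item["unit"], item["price_group"]))
    return result

order_lines = ["0001"] * 9
refetch, reuse = ItemTable(), ItemTable()
same = process_refetching(refetch, order_lines) == process_reusing(reuse, order_lines)
print(refetch.find_calls, reuse.find_calls, same)
```

Both variants return the same values. The difference is only in how many lookups were needed to produce them.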

Global Unified Pricing and tempDB

If we look at the most time-consuming part of sales order line creation, it is related to price calculation. In total there are 36.564 calls (5,58% of total) to methods associated with Global Unified Pricing (GUP), and these account for 2.844 ms (13,5% of total).

I see some areas for improvement where there are high-frequency calls that could benefit from additional caching and from keeping variables in scope. If it were possible to keep variable values across TTS scopes, there could be some additional savings.

If we look at the SQL calls related to Global Unified Pricing, there are 1.138 calls in total (19,64% of all calls), and their DB execution time is 8.791 ms (55,74% of total DB execution time).
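The disproportion between call share and time share is easier to see when it is worked out per statement. This sketch uses only the totals quoted in the text. The per-statement averages are derived values that do not appear in the original analysis, and the variable names are mine.

```python
# Totals quoted in the text, written as plain numbers.
gup_sql_calls, all_sql_calls = 1_138, 5_794
gup_sql_ms, all_sql_ms = 8_791, 15_771

call_share = gup_sql_calls / all_sql_calls
time_share = gup_sql_ms / all_sql_ms

# Derived averages: pricing-related statements versus everything else.
avg_gup_ms = gup_sql_ms / gup_sql_calls
avg_other_ms = (all_sql_ms - gup_sql_ms) / (all_sql_calls - gup_sql_calls)

print(f"share of SQL calls: {call_share:.2%}")
print(f"share of DB time:   {time_share:.2%}")
print(f"avg pricing statement: {avg_gup_ms:.2f} ms")
print(f"avg other statement:   {avg_other_ms:.2f} ms")
```

In other words, roughly a fifth of the statements account for more than half of the DB time, so the average pricing-related statement is several times more expensive than the average of the rest.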

The costliest calls (75 ms each) are made in GUPRetailPricingDataManagerV3::readPriceingAutoChargesLines() and are related to finding price componentCode values and matching these with auto charges and attributes on the products. The use of tempDB tables in these joins causes some delays, as the hash values need to be inserted into a temporary table, and after exiting each TTS scope they need to be recreated.

The use of tempDB for joins has become increasingly common throughout the codebase over the last few years. If we look at all SQL calls, 1.184 statements (20,61% of all) involve tempDB. The time spent executing statements involving tempDB joins is 10.396,87 ms (65% of total).

A 75 ms execution time for a query with this level of JOIN complexity is not unusual, and it is unlikely that the temporary tables are the primary culprit. The overall join strategy, and how the optimizer handles the multiple cross joins and filtering conditions, are more likely to be the factors that influence the execution time. Still, if tempDB could be avoided, there could be an additional 5-15% saving in execution time from using SQL “IN” or “NOT IN” statements. As X++ currently does not support SQL “IN”, I guess the approach would be to restructure the statements so that the smallest tables come first.

Feature management

One interesting fact is that checks and validations against features and flights have an effect on performance. In total, there are 19.561 method calls in the trace related to feature checks. The checks are fast, but the frequency is very high. This tells me that the code paths and feature management are very tightly integrated, and that feature states strongly influence execution paths.

A small fun fact is that there are quite a few feature check calls for deprecated features, like the Scale unit capability for Supply Chain Management, which was deprecated a few years ago.

Last note

In the world of performance tuning, tiny tweaks in code that runs thousands of times can add up to massive gains; think of it as the compound interest of optimization. A few milliseconds shaved off here and there may seem insignificant on their own, but they can quickly turn into a tidal wave of savings. So, remember: when it comes to high-frequency calls, every little improvement can make a big splash! And if you are still trying to find the needle in the haystack, the single piece of code that improves everything, remember that there is no haystack; only needles.
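To put a number on the compounding effect, here is a small what-if calculation. The call count and line count come from the trace figures earlier in the post. The saving of 0,01 ms per call is a purely hypothetical assumption for illustration, not a measured or promised improvement.

```python
xpp_calls = 655_025        # X++ method calls in the trace (from the post)
order_lines = 9            # sales order lines pasted (from the post)
saved_per_call_ms = 0.01   # hypothetical average saving per call

def total_saving_ms(calls, saving_per_call_ms):
    """Total time saved when every call gets cheaper by the same amount."""
    return calls * saving_per_call_ms

saved_ms = total_saving_ms(xpp_calls, saved_per_call_ms)
saved_per_line_ms = saved_ms / order_lines

print(f"total saving:    {saved_ms:.0f} ms")
print(f"saving per line: {saved_per_line_ms:.0f} ms")
```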