When I look at how Dynamics 365 is used, I usually see well-established processes and routines for getting data into the system. But use of that data is often limited to retrieving financial reports that show everything in dollars and cents. The information in the system is often of high value, yet effective use of it has not been put in place. The reason is often simple: one does not know how. And often the result is an overcomplicated enterprise-scale solution that costs much more than needed.

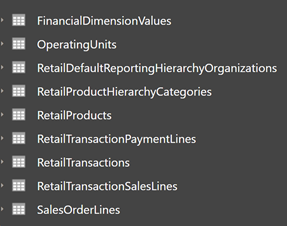

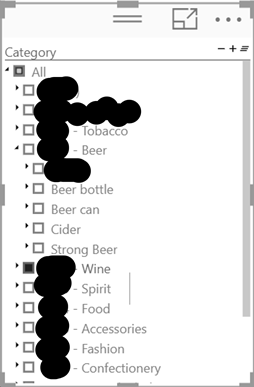

Here is a short list of what is standard and can quite quickly expose the data to reporting tools such as Power BI and Excel:

- Data export

This is the easy way: you select the data entities to be exported as Excel sheets, CSV or XML. It is manual, simple and takes very little effort to set up.

- OData

OData is also a simple and easy way to get access to Dynamics 365 F&O data, and it can be consumed directly in Power BI. But it is slow compared to the other options, and I don't recommend using it for transactional data. Use of OData for Power BI reports is discouraged; using the entity store for such scenarios is encouraged.

- BYOD (Bring your own database)

In Dynamics 365, you can set up an Azure SQL database as a destination when exporting data. Power BI can then read directly from this database, which makes the data easy to access. But an Azure SQL database can be expensive, and in the long run this way of exporting data will probably become less common, as Data Lake takes over more of this role.

- Entity store

Entity stores are analytic cubes that are already in place in the standard solution. When you go into the different work areas, there are already many embedded Power BI analyses that can be used directly. What very few are aware of is that these cubes can be made available in a Data Lake, so they can be used in reports that you create yourself. Dynamics 365 updates the Data Lake continuously, and there is only a short delay until the data is available there (trickle feed). I'm a bit surprised that so few customers use this option to create additional Power BI reports, or even to open the data flows directly in Excel. You can literally just select your dimensions and measures directly from the entity store in the Data Lake. Why is almost nobody using this standard feature?

- Dataverse and dual write

Dual write is a built-in solution where the data in Dynamics 365 is synchronously updated between the various apps. Typically, this is used to share master data between "customer engagement apps" and "Finance and Operations apps". But in reality, you can use whichever entities you want.

- Virtual entities

With virtual entities, the data stays in Dynamics 365 Finance and Operations but is exposed as entities in Dataverse. (You may need to use the legacy connector to access virtual entities in Power BI.)

- Data Lake

This is the solution that will really give the data value in the future. In a future release, it will be easier to set up which tables and entities are to be written to the Data Lake in almost real time. And it is not only the data that is written, but also metadata that describes the information and its relationships. So keep an eye on the roadmap for this.
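To make the OData option a little more concrete, here is a minimal Python sketch that only builds a query URL for a data entity. It assumes the usual `/data/<entity>` endpoint of a Finance and Operations environment. The host name `contoso.operations.dynamics.com` is made up, and the entity and field names are used purely for illustration. Authentication (an Azure AD bearer token) and the actual HTTP call are left out on purpose.

```python
from urllib.parse import quote, urlencode


def build_odata_url(base_url, entity, select=None, filter_expr=None,
                    top=None, cross_company=False):
    """Build an OData query URL for a data entity (illustrative helper)."""
    params = {}
    if select:
        # Fetch only the columns the report needs; OData is slow on wide reads.
        params["$select"] = ",".join(select)
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    if cross_company:
        # Without this option only the user's default company is returned.
        params["cross-company"] = "true"

    url = f"{base_url.rstrip('/')}/data/{entity}"
    if params:
        # Keep '$', ',' and quotes readable; spaces are encoded as %20.
        url += "?" + urlencode(params, quote_via=quote, safe="$,'")
    return url


# Hypothetical environment, entity and fields, for illustration only.
print(build_odata_url(
    "https://contoso.operations.dynamics.com",
    "CustomersV3",
    select=["CustomerAccount", "OrganizationName"],
    top=5,
))
```

Restricting the columns and the row count like this is about the only tuning OData gives you, which is one more reason to keep it away from large transactional tables.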

These ways of exposing data can be set up as data flows that can be subscribed to, not just by Power BI but also by Excel or other services that need the data. In Power BI you can subscribe to several data sources, so you can build the visualizations and analyses you want.
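The "other services" do not have to be anything advanced. As a rough sketch, a few lines of Python can total a CSV file of the kind a data export produces. The column names and sample rows below are invented for illustration. A real export will have the columns of whichever data entity you selected.

```python
import csv
import io
from collections import defaultdict


def total_by_key(csv_text, key_column, amount_column):
    """Sum a numeric column per key from CSV text (illustrative helper)."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row[key_column]] += float(row[amount_column])
    return dict(totals)


# Made-up sample standing in for an exported file.
sample = """CustomerAccount,InvoiceAmount
US-001,100.50
US-002,20.00
US-001,50.25
"""

print(total_by_key(sample, "CustomerAccount", "InvoiceAmount"))
# {'US-001': 150.75, 'US-002': 20.0}
```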

Then comes the big question: is it a lot of work to set up? What you may not be aware of is that much of this is already part of Dynamics 365. It takes very few hours to set up, and Power BI is also relatively easy to use.

One exciting area that follows from this is linking the data to machine learning / AI directly from Power BI, so that the system can build prediction models that find the connections in the data and produce predictions. Dynamics 365 Finance comes with solutions that give good indications of when customers will pay, suggestions for the next budget, or how the future cash position will look. Within trade / retail, there have been solutions for product recommendations based on customer profile and shopping cart.

The value of your data is determined by how you use it, and the first step is to make it available for use.