First, I want to say that Microsoft Dynamics 365 for Retail is the best retail system in the world. What we can do is just amazing! This blog post is going to be a mess without meaningful structure, because its purpose is to quickly give 911-help to retailers so that they can continue their daily operations. The post primarily focuses on the MPOS (Modern POS) with an offline database and a local RSSU (Retail Store Scale Unit). It will also be changed incrementally, and new topics will be added, so you are welcome to revisit it later.

MPOS Hardware

Microsoft does not give recommendations on hardware, but they have tested some hardware. I can also share what works for a scenario where an offline database is installed on the MPOS.

HP RP9 G1 AiO Retail System, Model 9018

◾ Microsoft Windows 10 enterprise 64-bit OS – LTSB

◾ HP RP9 Integrated Bar Code Scanner (as a secondary mounted scanner)

◾ 128GB M.2 SATA 3D SSD

◾ 16 GB RAM

◾ Intel Core i5-6500TE 3.3 6M 2133 4C CPU

◾ HP RP9 Integrated Dual-Head MSR -Right (For log-on card reading)

◾ HP L7014 14-inch Retail Monitor-Europe (for dual display)

◾ HP LAN THERMAL RECEIPT PRINTER-EUROPE – ENGLISH LOCALIZATION (TC_POS_TERMALPRINT_BTO)

A small tip: OPOS devices are slow and unpredictable, so try to avoid them. With this hardware, however, we still had to use OPOS for the receipt printer and the cash drawer.

All drivers related to this machine are available here.

Payment terminals

Building payment connectors is time consuming, but Microsoft has provided documentation and samples that are available here. Personally, I prefer ISV solutions for this.

◾ Ingenico iPP 350 Payment terminal (requires an ISV payment solution)

Additional Scanners

◾ SYMBOL DS9808

◾ Datalogic – Magellan 3200Vsi

Remember to open the scanner documentation and scan the programming barcodes in it to enable Carriage Return/Line Feed, adjust beeping, etc.

Generic preparation recommendations when having issues

The following chapter contains some preparation steps that you should be prepared to do.

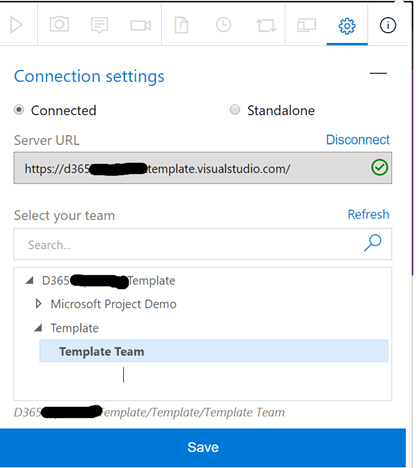

Install TeamViewer on the MPOS device

To make sure that a professional can quickly analyze the device, we always try to use or install TeamViewer on the RSSU and MPOS devices. This makes it possible to access the machines remotely. Please follow security precautions when using TeamViewer.

Start collecting information

Dynamics 365 for Retail contains a comprehensive set of events that are logged in the system and are available to IT resources. Please check out the following page for additional troubleshooting steps.

https://docs.microsoft.com/en-us/dynamics365/unified-operations/retail/dev-itpro/retail-component-events-diagnostics-troubleshooting

The following section contains issues experienced with manually installing Dynamics 365 MPOS.

If you cannot figure it out quickly, create a Microsoft support request as fast as you can. Normally Microsoft responds fast and can give recommendations quite quickly, but they will often need information from the actual machine to see whether there are issues related to software and hardware. MPOS and RSSU log a tremendous amount of information that is relevant for a support case. Take pictures and screen dumps, and collect data.

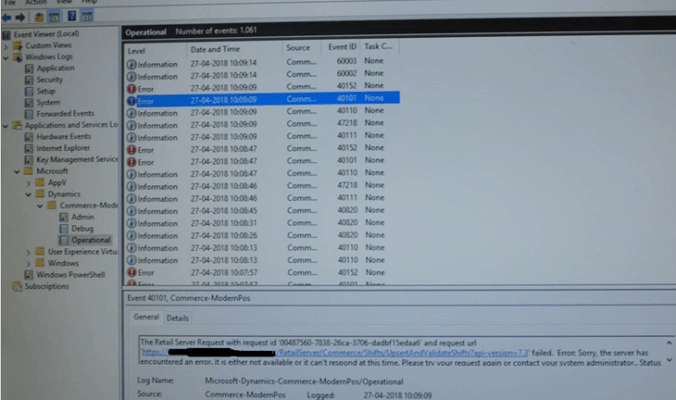

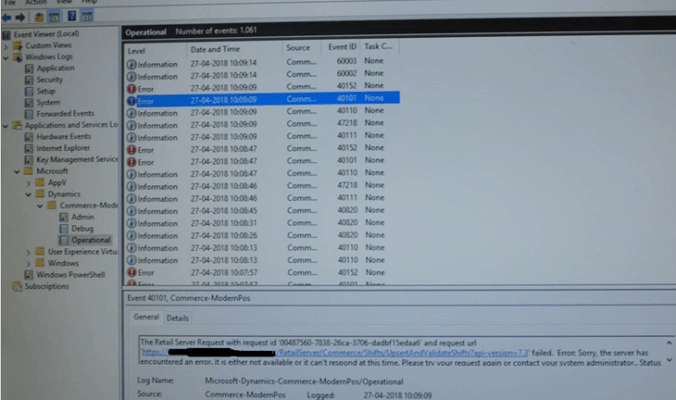

Event logs

Always look into the event logs on the MPOS and the RSSU. Also learn to export the event logs as they can give valuable information on what is wrong. The following event logs are of interest.

• Windows > Application

• Windows > Security

• Windows > System

• Application and Services Logs > MPOS/Operational
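
Exporting the logs can also be scripted. The following is a minimal Python sketch that builds `wevtutil epl` commands (the built-in Windows command for exporting an event log) for a list of log channels. The output folder and the exact channel name of the MPOS log are assumptions; check the real channel name in Event Viewer on your device before using it.

```python
import subprocess
from pathlib import PureWindowsPath

# The three standard Windows channels from the list above. The MPOS channel
# name varies, so the caller passes it in (its exact name is an assumption).
WINDOWS_CHANNELS = ["Application", "Security", "System"]


def export_commands(channels, out_dir):
    """Return one 'wevtutil epl <channel> <file>' command per channel."""
    commands = []
    for channel in channels:
        # Channel names may contain '/', which is not allowed in file names.
        file_name = channel.replace("/", "_") + ".evtx"
        target = str(PureWindowsPath(out_dir) / file_name)
        commands.append(["wevtutil", "epl", channel, target])
    return commands


def export_logs(channels, out_dir):
    """Run the exports. Windows only, from an elevated prompt."""
    for command in export_commands(channels, out_dir):
        subprocess.run(command, check=True)
```

Calling `export_logs(WINDOWS_CHANNELS, r"C:\Temp\logs")` on the device would write one .evtx file per channel, ready to attach to a support case.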

Machine information

Collect Microsoft System Information (MSINFO32), which shows details such as the devices installed in the MPOS and the device drivers loaded. To collect this data:

- Run a Command Prompt as an Administrator

- Execute MSINFO32.exe

- Go to the menu File > Save and save the file as machine.nfo
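
If you need to do this on many registers, the same file can be produced without the menu. This is a small Python sketch that relies on the `/nfo` switch of msinfo32; the target path is an assumption.

```python
import subprocess


def msinfo_command(nfo_path):
    """Build the command that saves System Information to an .nfo file."""
    return ["msinfo32", "/nfo", nfo_path]


def collect_system_info(nfo_path):
    """Run msinfo32 and wait until it has written the file (Windows only)."""
    subprocess.run(msinfo_command(nfo_path), check=True)
```

For example, `collect_system_info(r"C:\Temp\machine.nfo")` would create the same machine.nfo file as the manual steps.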

Backups of the local database

Take backups of the RSSU and local databases, as they can be handy for analyzing the data composition of the database. Sometimes Microsoft will ask for the exact database version and information like:

- What version of SQL is this? Further, is this Standard, Enterprise, Express, etc.? => Run the query select @@version and share the resulting string.

- How large is the SQL DB at this time?

- Is there still plenty of space available on the hard drive?

- What is the current size of the offline database and the RetailChannelDatabase log file?
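
To answer these questions quickly, the queries can be run through the sqlcmd command-line tool. The Python sketch below only builds and runs the calls; the instance name and the database names you pass in are assumptions that depend on your installation.

```python
import shutil
import subprocess

QUERIES = {
    "version": "SELECT @@VERSION;",
    # sys.database_files reports size in 8 KB pages; convert to MB.
    "file_sizes": "SELECT name, type_desc, size * 8 / 1024 AS size_mb "
                  "FROM sys.database_files;",
}


def sqlcmd_command(server, database, query):
    """Build a sqlcmd call that uses Windows authentication (-E)."""
    return ["sqlcmd", "-S", server, "-d", database, "-E", "-Q", query]


def run_query(server, database, name):
    """Run one of the named queries and return sqlcmd's text output."""
    result = subprocess.run(
        sqlcmd_command(server, database, QUERIES[name]),
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def free_gb(path):
    """Free space in GB on the drive that holds 'path'."""
    return shutil.disk_usage(path).free / 1024 ** 3
```

For example, `run_query(r"localhost\SQLEXPRESS", "RetailChannelDatabase", "file_sizes")` would list the data and log file sizes of that database, assuming the instance is named SQLEXPRESS.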

RSSU installation and Checklist

The setup and installation of the RSSU are documented in Microsoft Docs: https://docs.microsoft.com/en-us/dynamics365/unified-operations/retail/dev-itpro/retail-store-scale-unit-configuration-installation

- The operating system is Windows 10 Enterprise LTSB with a separate disk for SQL. SSD disks are highly recommended!

- SQL Server 2016 Standard edition with full-text search installed locally on the server.

– I would not recommend SQL Express on an RSSU with multiple MPOS devices installed.

- Install .NET 3.5, .NET 4.6 and IIS, and run Windows Update before setup.

- Make sure that the SSL certificates (RSSU and MPOS) have been installed and set up on the machine. Remember to add them to your Azure account.

- Verify that you have Azure AD credentials that you can use to sign in to Retail headquarters.

- Verify that you have administrative or root access to install Retail Modern POS on a device.

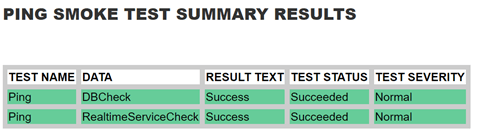

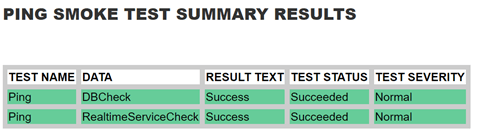

- Verify that you can access the Retail Server from the device. (like ping with https://XXX.YY.ZZ/RetailServer/healthcheck?testname=ping)

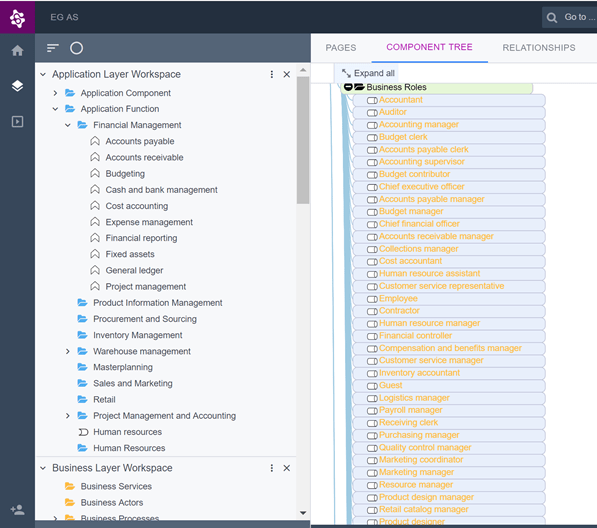

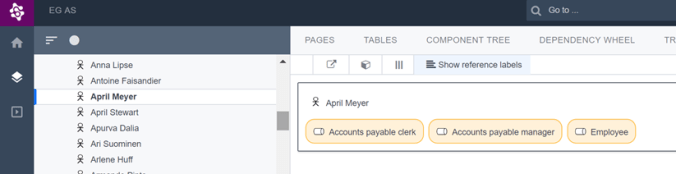

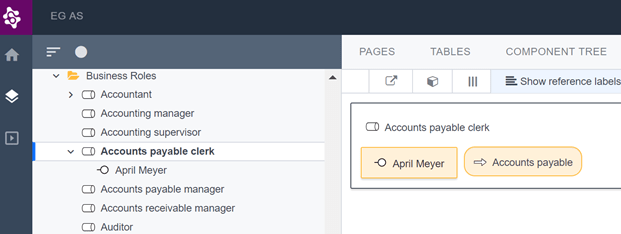

- Verify that the Microsoft Dynamics 365 for Retail, Enterprise edition, environment contains the Retail permission groups and jobs in the Human resources module. These permission groups and jobs should have been installed as part of the demo data.

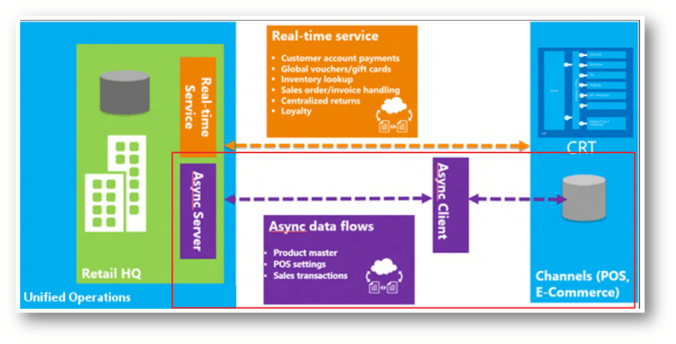
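
The Retail Server ping from the checklist above can also be tested from a script. This is a minimal Python sketch using only the standard library; the root URL is the placeholder from the checklist, and treating HTTP 200 as "reachable" is my assumption.

```python
import urllib.request


def healthcheck_url(retail_server_root):
    """Append the ping health check to the Retail Server root URL."""
    return retail_server_root.rstrip("/") + "/healthcheck?testname=ping"


def can_reach_retail_server(retail_server_root, timeout=10):
    """Return True if the health check answers with HTTP 200."""
    url = healthcheck_url(retail_server_root)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # URLError, HTTPError and socket timeouts are all OSError subclasses.
        return False
```

Run it from the MPOS device itself, for example `can_reach_retail_server("https://XXX.YY.ZZ/RetailServer")`, so that the test uses the same network path as the POS.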

A small but important piece of information about the RSSU: it is designed to always have some kind of cloud connection. If it loses this connection, strange issues start to occur, especially in relation to RTS (Real-time Service) calls.

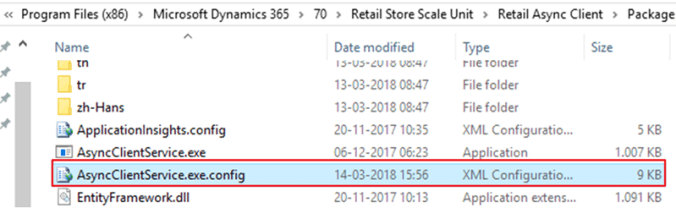

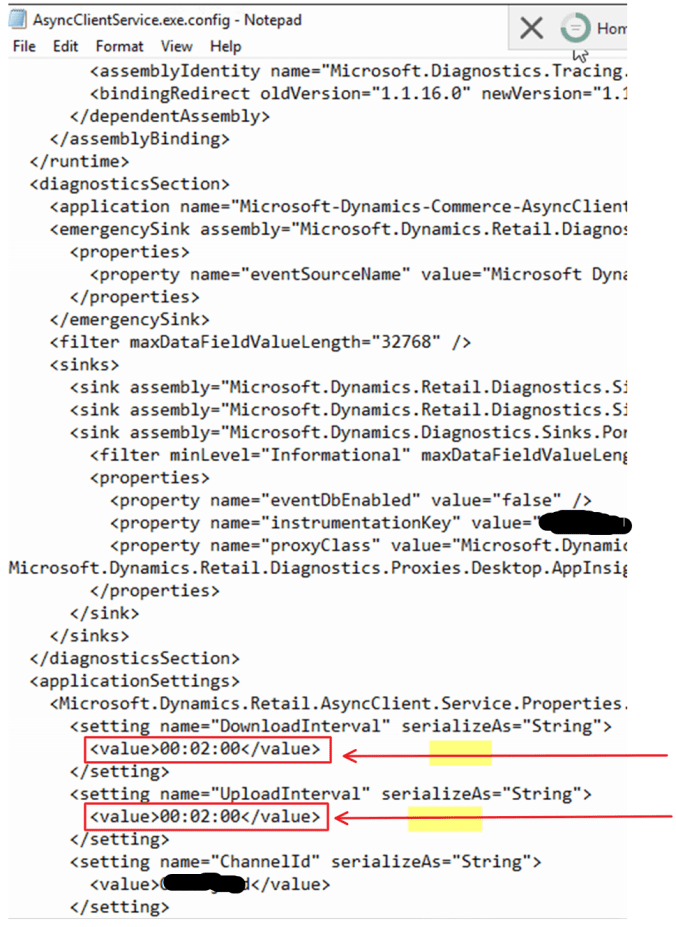

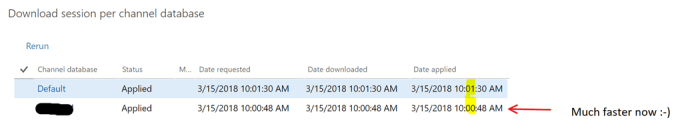

Set Async interval on RSSU

This has been described in a previous blogpost.

MPOS installation issues

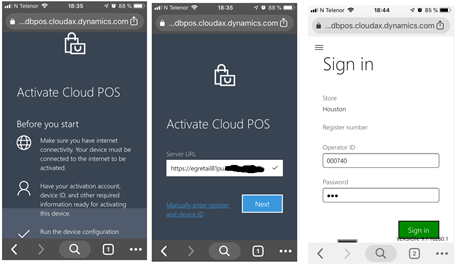

There are a number of prerequisites that need to be followed; they are available on Microsoft Docs. Read them very carefully and follow them to the letter. Do not assume anything unless it is stated in the documentation. Also read https://docs.microsoft.com/en-us/dynamics365/unified-operations/retail/dev-itpro/retail-device-activation. Here are my additional tips:

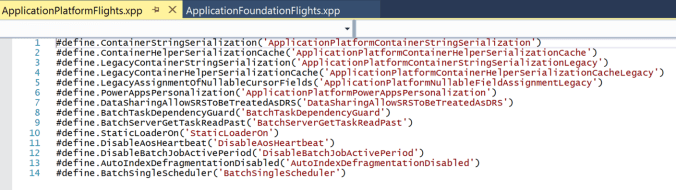

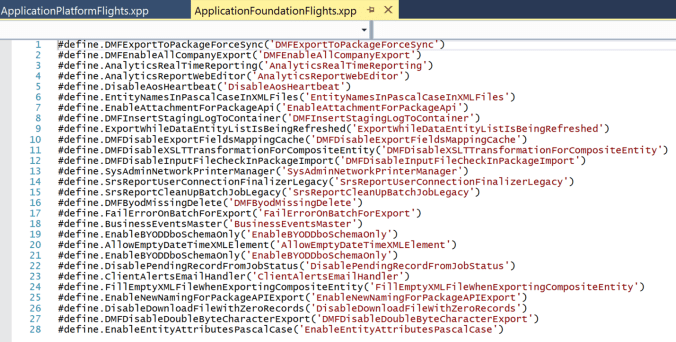

When having customizations or extensions

If you have made extensions, make sure that the developer who made the deployable package has built the package with “configuration = Release”. There are scenarios where the MPOS installation can give issues like this.

There are also scenarios where you want to make an MPOS build with configuration = Debug for internal use. For those, please take a look at the following Microsoft blog post.

Having the right local SQL express with the right user access on the MPOS

If you are making a retail POS image (with Windows and SQL preinstalled), please make sure to select the right SQL version (currently SQL 2014 SP2). If SQL Express is not already installed, the MPOS installer will automatically download and install it. But the file is 1.6 GB, so it is recommended to install SQL Express manually or to have it as part of the standard image. SQL Express is available here; select the SQLEXPRADV_x64_ENU.exe file.

There are ways of using SQL Express 2017 with MPOS, but I recommend waiting with this until Microsoft officially includes it in their installer. Also remember that SQL Express has some limitations: it can only use 1 GB of RAM and has a 10 GB database size limit.

I recommend creating two users on an MPOS machine:

– A PosUser@XXX.YYY, which is a user with very limited rights on the machine. Customers often want auto-login to the machine with this user. This user also needs administrator elevation whenever it has to do administrator tasks on the machine.

– A PosInstaller@XXX.YYY, which has administrator rights on the local MPOS machine.

When installing SQL Express, remember to add both PosUser and PosInstaller as users in SQL; otherwise the installer struggles to create the offline databases.

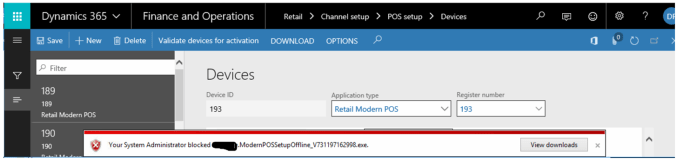

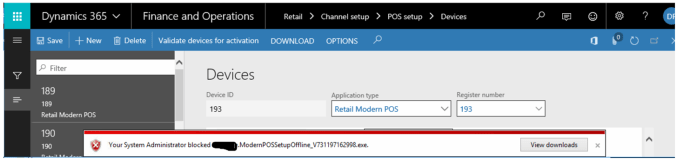

Cannot download MPOS package from Dynamics 365

If you try to download the installation package manually, Windows Explorer is sometimes set up to deny this.

The reason for this could be a certificate problem with the package. The workaround is to use Chrome when downloading.

Cannot install the MPOS Offline package

When installing the MPOS, the following error may appear. In many cases the user must be elevated to administrator. If you receive the following error, it means that the version you are installing is older than the existing version, and the current version must be uninstalled first. Do not try to install a higher version than the one deployed in your Cloud RSSU default database, as this is not supported. Also, if you need to “downgrade” an MPOS, uninstall the MPOS first and then reinstall the older release.

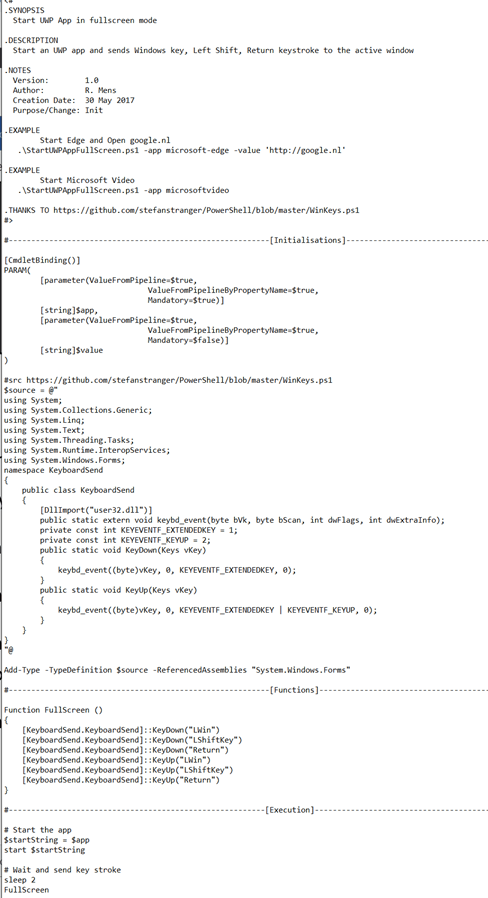

PowerShell scripts for manual uninstalling of MPOS

In 95% of situations, just uninstalling the MPOS app should work. But if you are out of options, Microsoft has created an uninstall PowerShell script.

cd "C:\Program Files (x86)\Microsoft Dynamics 365\70\Retail Modern POS\Tools"

.\Uninstall-RetailModernPOS.ps1

I often experience that we need to run the uninstall in the following sequence:

1. Run it as a local administrator

2. Then an “uninstall” icon appears on the desktop, which we need to click

3. Run it again as a local administrator

Then the MPOS is gone, and you can reinstall the correct MPOS.

Connectivity issues

Here are some tips on connectivity issues, and how to solve them.

MPOS is slow to log in

When starting the MPOS, it can sometimes take a few seconds before it is available. We typically see this if you have a slow internet connection with high latency. The MPOS is communicating with the cloud, and this just takes time.

MPOS cannot go online after being offline

I think this behavior is currently a bug that can happen in certain situations and if the RSSU loses internet connectivity. Microsoft is investigating the causes. If it is not possible to go online after the MPOS has been offline, it is possible to reactivate the MPOS to get online. In the event log you may see issues like this: “UpsertAndValidateShifts”

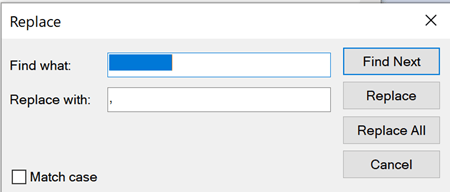

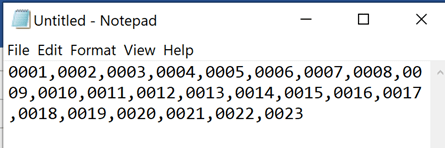

Rename the file: C:\Users\[POS-User]\AppData\Local\Packages\Microsoft.Dynamics.Retail.Pos_tfm0as0j1fqzt\AC\Microsoft\Internet Explorer\DOMStore\DSSWV5L9\microsoft.dynamics.retail[1].xml

Then reactivate the MPOS with the RSSU address, register and device, and log in with the D365.posinstaller.

IMPORTANT: Remember to select the hardware station when logging into the MPOS afterwards!

This is not a supported “fix” from Microsoft, and it is expected that Microsoft will find a permanent solution to this issue.

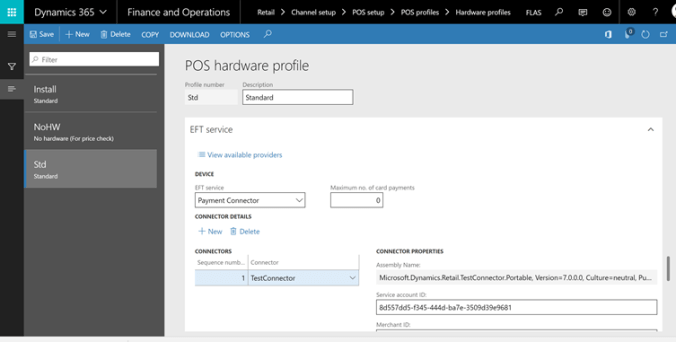

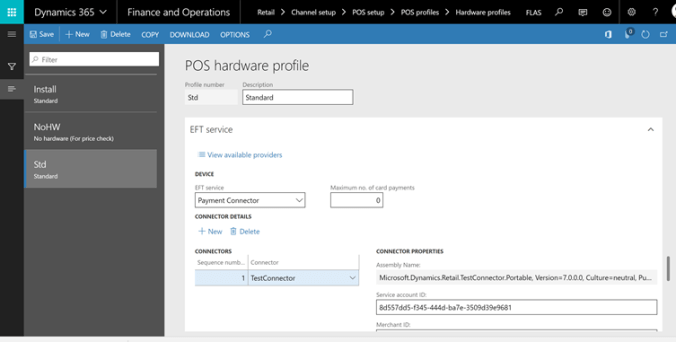

MPOS cannot connect with the payment connector

The following mainly relates to issues that can happen with a third-party payment connector using a PIN pad. In most generic cases this is not relevant for those who are using the standard or other payment connectors.

1. First check that Hardware station is selected on the MPOS.

2. The next step is to reboot the PC

3. If still not working, copy the file MerchantInformation.xml to the folder “C:\ProgramData\Microsoft Dynamics AX\Retail Hardware Station” and also to C:\Users\[POS-User]\AppData\Local\Microsoft Dynamics AX\Retail Hardware Station. This ensures that payment also works as expected in offline mode. The MerchantInformation.xml is a file that is downloaded from the cloud the first time the POS is started. If changing the hardware profile

4. If still not working, open the hardware profile and, in the profile, set the EFT Service to Payment connector and Test connector. This will download the MerchantInformation.xml again.

Then run the 1090 distribution job. After X minutes, restart the MPOS and try to perform a payment. This should also automatically regenerate the MerchantInformation.xml. Microsoft is working on a fix for this, and you can follow the issue here.

PS! Normally a production environment should not need to have connection to the Microsoft test connector
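
The file copy in step 3 above is easy to get wrong when done by hand on many registers. Here is a small Python sketch of that step; it is only an illustration, and the function name is my own. The two target folders are the ones named in step 3.

```python
import shutil
from pathlib import Path


def copy_merchant_info(source_file, target_dirs):
    """Copy the file into every target folder and return the new paths."""
    copied = []
    for target_dir in target_dirs:
        target_dir = Path(target_dir)
        if not target_dir.is_dir():
            # A missing folder usually means the Hardware Station is not
            # installed for that user, so stop instead of creating it.
            raise FileNotFoundError(f"Folder not found: {target_dir}")
        # copy2 keeps the file timestamps, which helps when comparing later.
        copied.append(Path(shutil.copy2(source_file, target_dir)))
    return copied
```

On a register, the targets would be `C:\ProgramData\Microsoft Dynamics AX\Retail Hardware Station` and the matching folder under the POS user's `AppData\Local`.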

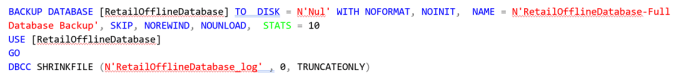

Retail offline database exceeds 10 Gb limit

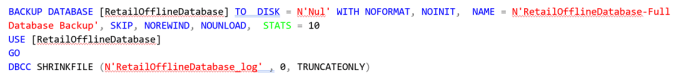

To ensure that a POS doesn’t exceed the SQL Express 10 GB restriction, I have created a SQL script that reduces the size of the log file. Please consider implementing it on all POS devices.

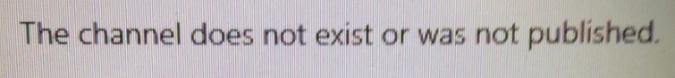

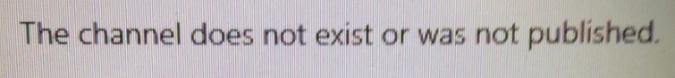
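
To know when a register is getting close to the limit, a simple check like the following Python sketch can be used. The 80% warning threshold is my assumption, and the size in MB must come from your own monitoring (for example from a query against the database).

```python
EXPRESS_LIMIT_MB = 10 * 1024  # the 10 GB SQL Express limit mentioned above


def limit_usage(size_mb, limit_mb=EXPRESS_LIMIT_MB):
    """Return the used share of the limit (0.5 means 50%)."""
    return size_mb / limit_mb


def needs_attention(size_mb, warn_at=0.8):
    """True when the database has passed the warning threshold."""
    return limit_usage(size_mb) >= warn_at
```

A database of 9,000 MB would be flagged, while one of 4,000 MB would not.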

Getting strange errors like “The channel does not exist or was not published”

In some rare situations you could get errors like this:

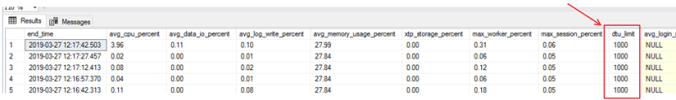

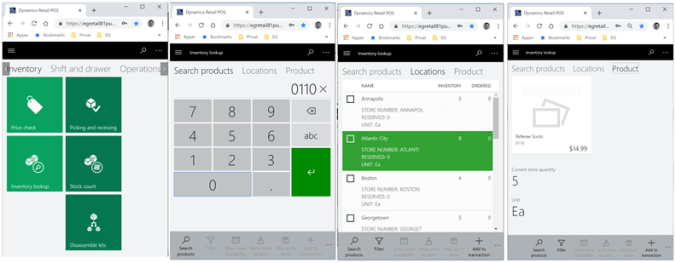

Our experience is that this can happen if the database on the RSSU is overloaded and is not able to respond to MPOS connections. Log into the RSSU and check whether the CPU, database and disks are able to respond. We have experienced this when running SQL Express on the RSSU. Also try not to push too many distribution jobs too frequently. In one situation we uploaded 400,000 customers while running distribution job 1010 (customers) every 5 minutes. That “killed” the RSSU, which was running SQL Express.

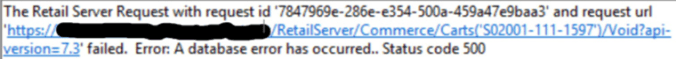

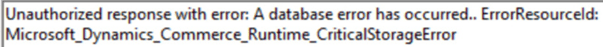

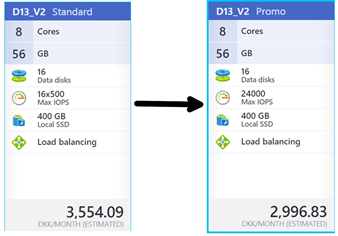

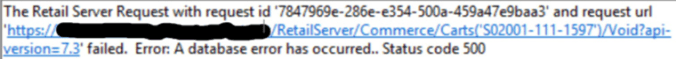

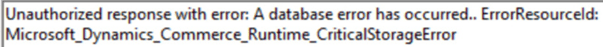

Getting strange errors like “A database error occurred”

We have also experienced this when the RSSU is overloaded. Remember that the Microsoft recommendation on RSSU hardware needs to be scaled according to how many MPOS devices are connected and to the data and transaction volume. Get an SQL expert to evaluate the setup of the RSSU prior to go-live, and remember to volume test the setup.

How to fix? Scale up your RSSU.

Getting strange errors like “We were unable to obtain the card payment accept page URL”

We have also experienced the following issue. The solution was simple: remember to enable the local hardware station on the MPOS.

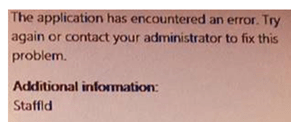

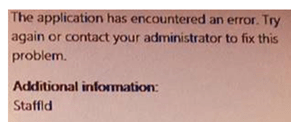

Getting strange errors like “StaffId”, when returning a transaction

In a situation where there is a connection between the MPOS and the RSSU, but the RSSU doesn’t have a connection to the cloud, and you perform a “return transaction”, you may get the following error.

“Return transaction” is defined as an operation that requires online RTS (Real-time Service) calls. The following list defines all POS operations and whether they are available in offline mode.

The solution in this situation is therefore to use the POS operation “Return Product” instead on the MPOS.

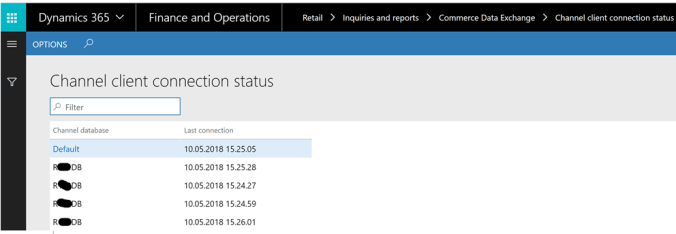

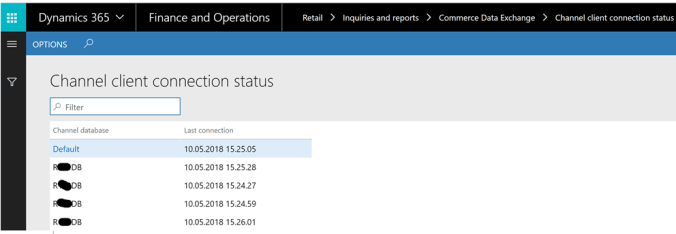

Keep an eye on your devices.

In the menu item Channel client connection status you can see last time each device was connected.

Functional issues

With functional issues I refer to issues that are related to user errors and other functional issues that can occur.

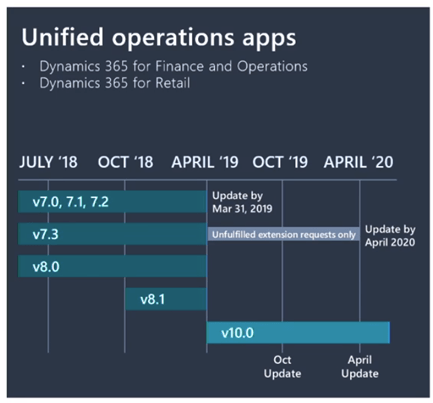

Dynamics 365 for Retail on version 8

Even though version 8 has been launched for Dynamics 365 for Finance and Operations, I have not yet (10 May 2018) seen that Retail is supported on version 8. So before going forward with version 8, please check with Microsoft support.

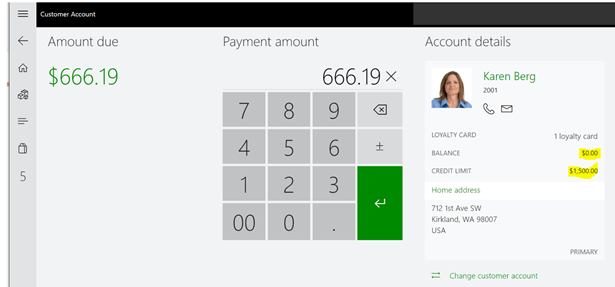

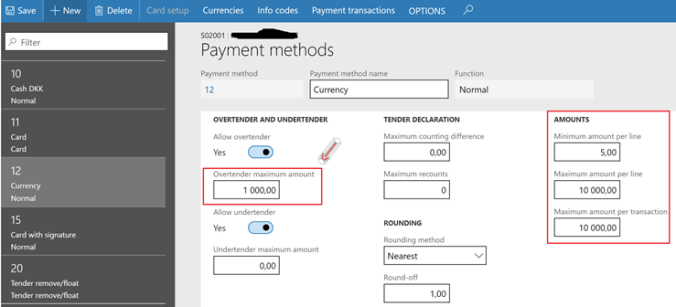

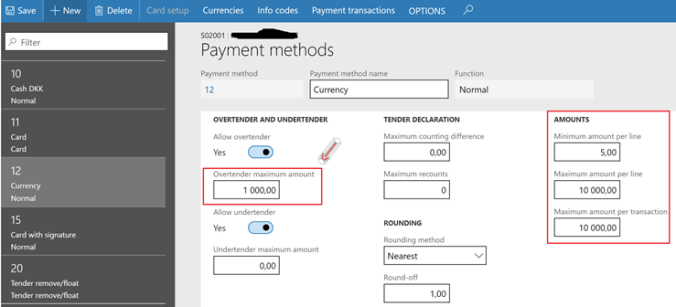

Barcode scanned as tendered currency amount

This is a funny issue that can occur. Some background is in order here. A customer wanted to pay for the product in another currency, and the cashier selected “pay currency” on the MPOS, ready to key in the amount that the customer was paying. Unfortunately, the cashier then scanned the product barcode, and the MPOS committed the sale as if the customer had paid 7.622.100.917,80 in the foreign currency and should have 5.707.750.079.417 in return (local currency). Lesson learned: always remember to set the parameter “Overtender maximum amount” and the Amounts fields.

How to fix it? You actually need to create a Microsoft support request to have them make some changes in the database. This takes time, and it first has to be performed in a staging environment that has been updated. It can take a lot of time! So make sure you set these parameters correctly before you go live.
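
To show why the overtender limit matters, here is a purely hypothetical Python sketch of the kind of check such a parameter enables. It is not how the MPOS implements it; the function name and the limit of 500 are made up for illustration, and both amounts are assumed to be in the same currency.

```python
from decimal import Decimal


def tender_allowed(amount_due, amount_tendered, max_overtender):
    """Reject a tender that exceeds the amount due by more than the maximum."""
    overtender = amount_tendered - amount_due
    return overtender <= max_overtender


# A barcode read as an amount is far above any realistic overtender,
# so a sensible maximum stops the sale before it is committed.
scanned_as_amount = Decimal("7622100917.80")
blocked = not tender_allowed(Decimal("100"), scanned_as_amount, Decimal("500"))
```

With a maximum in place, the scanned barcode is rejected while a normal overpayment still goes through.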

Cannot post retail statement, because of a rounding issue.

This is a known issue, and Microsoft has a hotfix for it. Always make sure you periodically update your system with the latest hotfixes. Here is my small tip on this: try clicking Post 4-5 times, and then it suddenly goes through and gets posted. We do not know why.

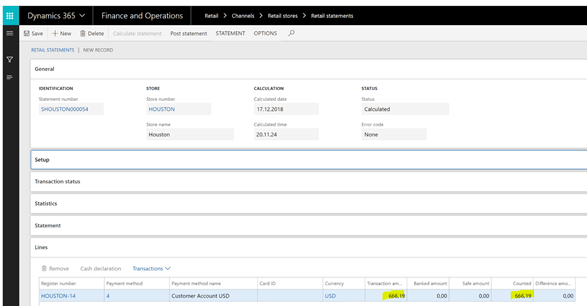

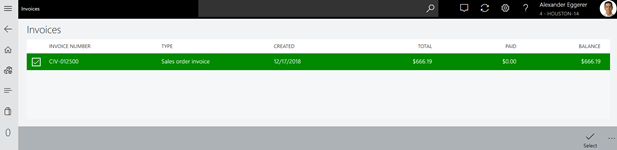

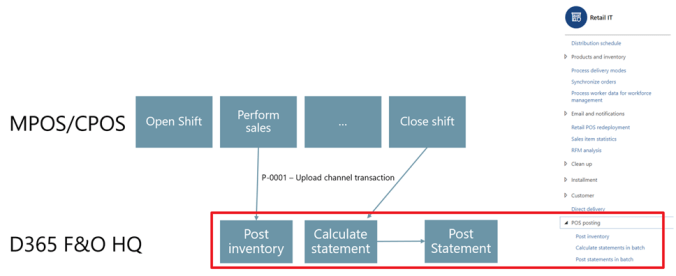

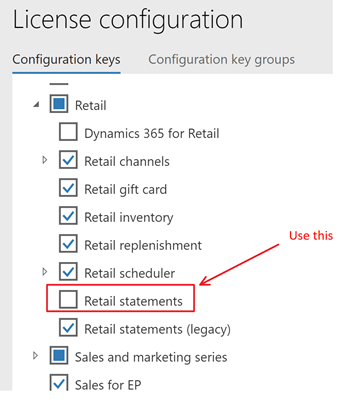

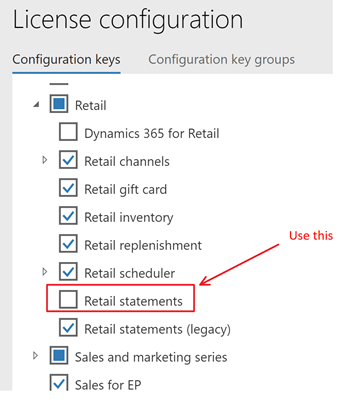

Retail statement (Legacy) and Retail Statement

In version 7.3.2, Microsoft released a new set of functionality for calculating and posting retail statements. You can read more about it here. Microsoft recommends that you use the Retail statements configuration key for the improved statement posting feature, unless you have compelling reasons to use the Retail statements (legacy) configuration key instead. Microsoft will continue to invest in the new and improved statement posting feature, and it’s important that you switch to it at the earliest opportunity to benefit from it. The legacy statement posting feature will be deprecated in a future release.

Access hidden Retail menu items.

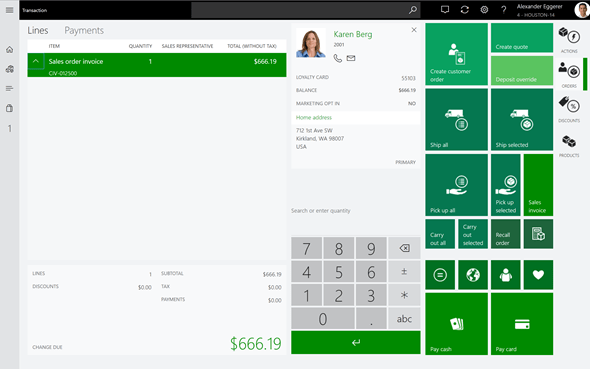

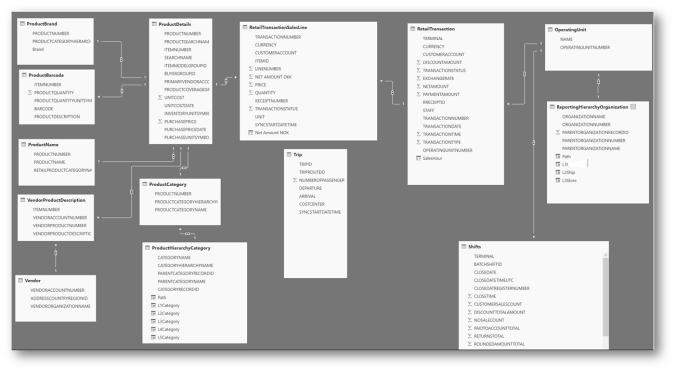

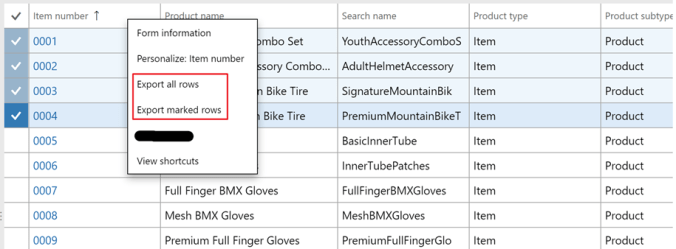

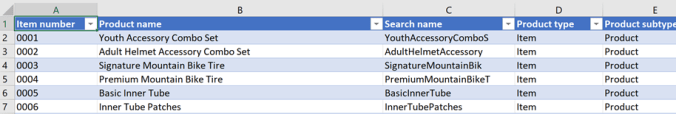

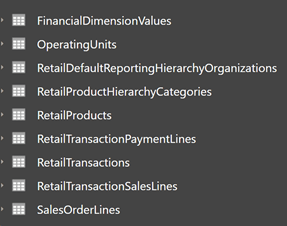

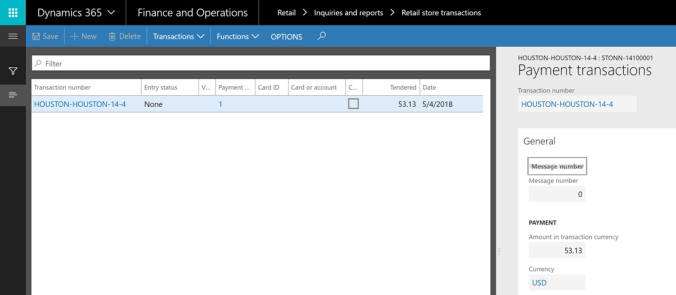

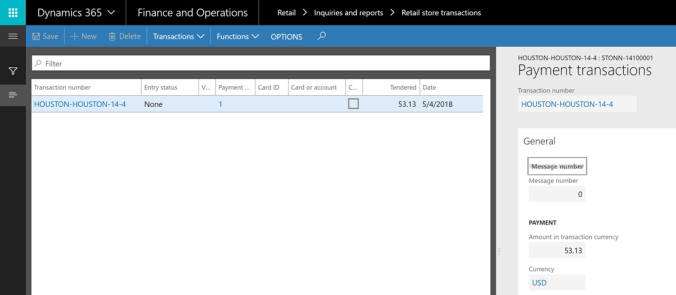

The form “Retail Store transactions” contains all retail transactions that are received from the MPOS/RSSUs, and here you will find sales, logins, payments, etc. The first step for any user should be to personalize this form and show only the relevant fields and columns (not done here).

You can dig deeper into the transactions, by clicking the “Transactions menu”

If I here open the “Payment transactions” I get a filtered view of the payment transactions related to that receipt.

BUT! In many cases you would like to look at ALL the payment transactions, and not only those related to a specific receipt. But there are no menu items that let you see all payment transactions in one form.

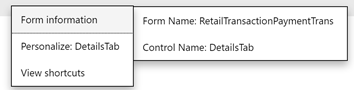

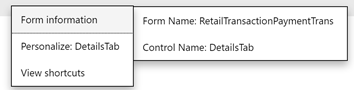

Here is my tip. Right-click on the form, and you can see the form name. Click on that …

And you should be able to see the menu item name.

Then copy your D365FO URL, replace the menu item name, and open it in another browser tab.

Then you get a nice list of all payment transactions, regardless of which receipt they are connected to.

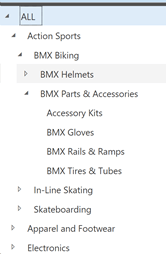

This procedure can be used in most places in Dynamics 365. For retail, this is excellent because sometimes you need to find specific transactions. If you need to reconcile banked transactions (where you have a Bag number), then you can use this approach to see all banked bag numbers in a single form. But here is a list of the most common ones:

| Transactions | Menu item (URL parameter) |
| --- | --- |
| Sales transactions (items) | &mi=RetailTransactionSalesTrans |
| Payment transactions | &mi=RetailTransactionPaymentTrans |
| Discount transactions | &mi=RetailTransactionDiscountTrans |
| Income/Expense transactions | &mi=RetailTransactionIncomeExpenseTrans |
| Info code transactions | &mi=RetailTransactionInfocodeTrans |
| Banked declaration transactions | &mi=RetailTransactionBankedTenderTrans |
| Safe tender transactions | &mi=RetailTransactionSafeTenderTrans |
| Loyalty card transactions | &mi=RetailTransactionLoyaltyRewardPointTrans |
| Order/Invoice transactions | &mi=RetailTransactionOrderInvoiceTrans |
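
If you do this often, swapping the menu item in the URL can be scripted. The following Python sketch replaces the `mi` query parameter and leaves the rest of the URL untouched. The host name, the `cmp` company parameter and the starting menu item in the example are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_menu_item(url, menu_item):
    """Return the URL with its 'mi' query parameter set to menu_item."""
    parts = urlsplit(url)
    # Keep every parameter except the old menu item.
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "mi"
    ]
    params.append(("mi", menu_item))
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical environment URL, for illustration only.
current = "https://example.operations.dynamics.com/?cmp=USRT&mi=RetailTransactionTable"
all_payments = with_menu_item(current, "RetailTransactionPaymentTrans")
```

Paste the resulting URL into a new browser tab to open the form with all payment transactions.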

Unit conversion between <unit 1> and <unit 2> does not exist.

If you use Retail Kitting and have kits with intraclass unit conversions, there is an issue that Microsoft is working on. It occurs in scenarios where the included kit line is stocked in pcs and consumed in centiliters. We expect a fix for this.

Wrong date format on the POS receipt.

In EN-US we have the date format MM/DD/YYYY. In Europe we use DD/MM/YYYY. The date format on the receipt is controlled by the language code defined on the store. We often prefer to have EN-US as the language on stores, but this gives the wrong date format. Therefore, to get the right date format on the receipt, you have to maintain product names/descriptions in multiple languages (like both EN-US and EN-GB) and specify that the language on the POS store should be EN-GB. We are working on finding a better and more permanent solution to this.
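
The difference is easy to see with a date where day and month can be mixed up. This small Python sketch formats the same date both ways; the mapping from language code to pattern is just an illustration of the two formats above, not how the POS resolves them.

```python
from datetime import date

# strftime patterns for the two receipt date formats discussed above.
DATE_PATTERNS = {
    "en-US": "%m/%d/%Y",  # MM/DD/YYYY
    "en-GB": "%d/%m/%Y",  # DD/MM/YYYY
}


def receipt_date(day, language):
    """Format a date the way a receipt in that language would show it."""
    return day.strftime(DATE_PATTERNS[language])


us_style = receipt_date(date(2018, 5, 10), "en-US")  # 05/10/2018
gb_style = receipt_date(date(2018, 5, 10), "en-GB")  # 10/05/2018
```

A European customer reading the EN-US receipt would take 10 May for 5 October, which is why the store language matters.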

Dual display.

Microsoft writes: “When a secondary display is configured, the number 2 Windows display is used to show basic information. The purpose of the secondary display is to support independent software vendor (ISV) extension, because out of the box, the secondary display isn’t configurable and shows limited content.” In short: you have to create/develop it yourself in the project. This requires a skilled Retail developer who masters RetailSDK, C# and JavaScript.

Credit Card payment with signature

In certain situations, the payment terminal is capable of processing the payment but for some reason does not close the “waiting for customer payment”. In most cases this is related to the payment terminal being able to perform offline transactions, in which case the payment terminal prints a receipt that the customer must sign. For such cases we have created a separate payment method called “pay with signature”, which is posted in exactly the same way as a credit card payment method. The cashier is then able to continue the payment processing, register that the payment was OK, and print out the receipt.

If the cashier did something very wrong, suspend the transaction

If for some reason the cashier is not able to continue with the transaction, the cashier has the option of suspending the transaction and then continuing. Later, the POS experts can resume the transaction and find out what went wrong.

Setting up MPOS in tablet mode

The MPOS works very nicely in tablet mode. But if you have a dual display, the PC cannot be put into tablet mode. We have not found a way to fix this; if you know one, please share.

MPOS resolution and screen layout does not fit the screen

Do not just set the MPOS resolution to the screen resolution. If there is a “title bar”, you need to subtract that title bar height from the screen layout. This is important in scenarios where you have dual displays.
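
As a quick worked example of the subtraction, here is a minimal Python sketch. The 30-pixel title bar in the usage note is an assumption; measure the real height on your own device.

```python
def layout_size(screen_width, screen_height, title_bar_height):
    """Return the (width, height) left for the MPOS screen layout."""
    if title_bar_height < 0 or title_bar_height >= screen_height:
        raise ValueError("title bar height must be between 0 and the screen height")
    return screen_width, screen_height - title_bar_height
```

For a 1920 x 1080 screen with a 30-pixel title bar, `layout_size(1920, 1080, 30)` gives a layout of 1920 x 1050.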

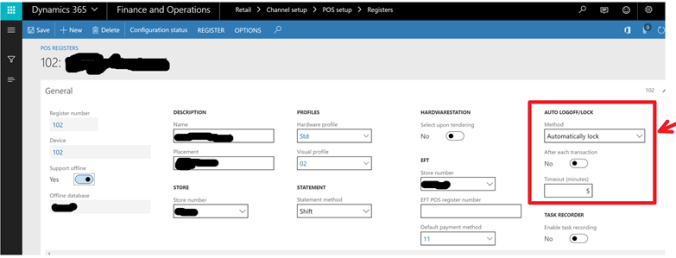

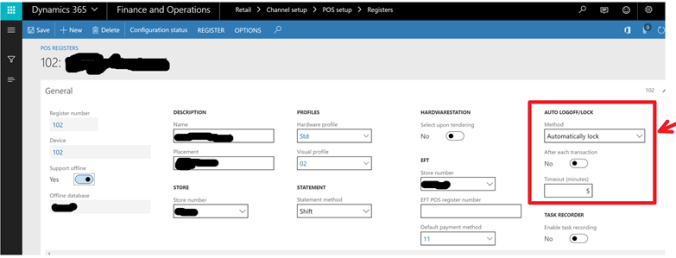

Use lock screen and not log off on the registers.

The log-out/in process is more “costly” from a resource perspective than the lock operation.

Keep the MPOS running (but logged out) when not using the device.

As Dynamics 365 periodically sends new data to the MPOS offline database, this will be done throughout the day and night. Then the MPOS is “fit-for-fight” when the user logs in.

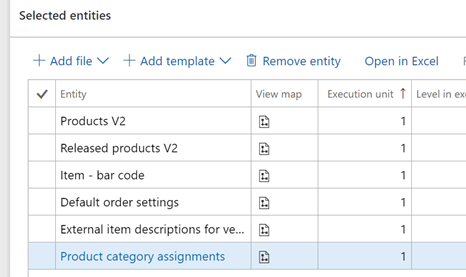

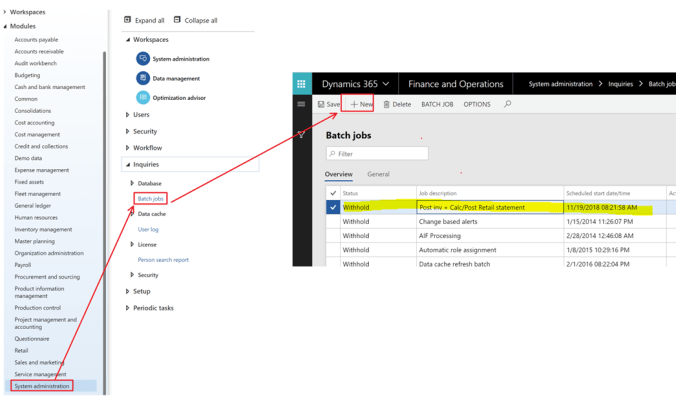

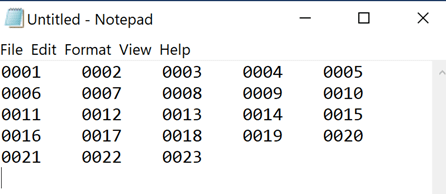

Run Distribution jobs in batch

My guideline for retail distribution jobs is that all Retail jobs start with the R prefix, followed by a number. Download distribution jobs are R1000-1999. Upload distribution jobs are R2000-2999. Processing batch jobs are R3000-3999. Retail supply chain processes are named R4000-4999.
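
The numbering convention above can be expressed as a small lookup, which is handy if you want to validate job names in a script. This Python sketch assumes job names start with the R prefix and a four-digit number, optionally followed by a description.

```python
import re

# Number ranges from the naming guideline above.
JOB_RANGES = [
    (1000, 1999, "Download distribution job"),
    (2000, 2999, "Upload distribution job"),
    (3000, 3999, "Processing batch job"),
    (4000, 4999, "Retail supply chain process"),
]


def job_category(job_name):
    """Map a name like 'R1040 Products' to its category, or None if it does not fit."""
    match = re.match(r"R(\d{4})\b", job_name)
    if not match:
        return None
    number = int(match.group(1))
    for low, high, category in JOB_RANGES:
        if low <= number <= high:
            return category
    return None
```

Names that do not follow the convention return None, which makes them easy to spot in a list of batch jobs.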

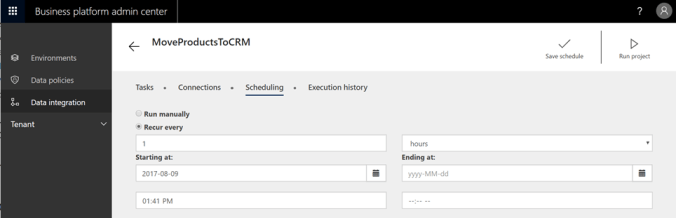

There are a number of jobs distributing data from Dynamics 365 to the store databases (RSSU) and the offline databases. The jobs and the recurrence I suggest are

Those are my tips for today. If you have read this all the way to the end, I’m VERY impressed, so let me know in the comments.